For you reading this, today is Thursday and you already know whether Nvidia crashed and burned or not, whether the official version of the Digital Omnibus is as bad as they said or not, and many other things.

We don’t know, so the intro is about something else.

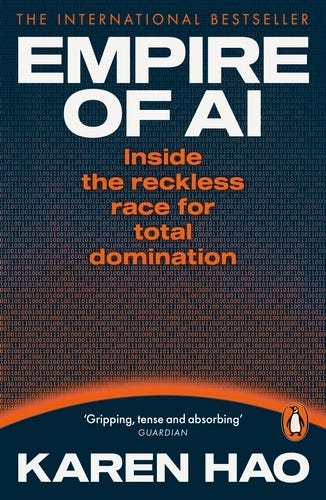

The book “Empire of AI” by Karen Hao goes on sale in Spanish next week. It’s the book of the year, no doubt about it.

It condenses in one place all the dirty laundry of the AI bubble. And I mean all of it, as it touches on every aspect, in more or less detail. But it also ruthlessly puts the bros leading this story in their place — which, heh, is exactly how we like it. Without falling into pure gossip like “Los irresponsables”, it mentions lots of personal details and stories about these billionaires, humanizing them. So to speak.

In the week when Yann LeCun waved goodbye to none other than Chad Zuck, it’s good to see that these farewells are a constant in the brief history of modern AI.

Elon Musk joined forces (and millions) with a then-unknown Sam Altman to poach talent from Big Tech with the golden promise of joining a project, OpenAI, where safety and alignment of AI (and, ahem, AGI when it arrived) would take precedence over the money obsession criticized in DeepMind, Google’s AI division led by another historic figure: Demis Hassabis.

That golden, empowering promise, along with a few technical successes and a mix of things like Effective Altruism, paid off and triggered a small talent exodus to OpenAI, helping it skyrocket and charm Microsoft into becoming one of the first party backers of the show.

Soon enough (between the launch of ChatGPT 3.5 and 4.0), it became clear that the only golden thing Sam Altman promised was the money he needed, sought, and found to fund the massive scale of resources required to train the next generation of models. The Amodei siblings, heads of OpenAI’s safety division, packed up and founded Anthropic. A big chunk of Effective Altruism funds (a movement pretty shaken after the FTX debacle) went with them.

Anthropic ended up being a smaller and more profitable version of OpenAI, without major advantages in terms of safety and alignment, by the way.

After the short-lived coup at OpenAI and the return of a strengthened Sam Altman, Ilya Sutskever had to leave and start his own company.

This week, Yann LeCun waves goodbye to Zuckerberg, and no one knows if it’s because he backed the wrong horse or simply because of Zuckerberg.

Place your bets now on who the next AI guru will be to pack their bags and launch their own gig.

You’re reading ZERO PARTY DATA. The newsletter on tech news from the perspective of data protection law and AI by Jorge García Herrero and Darío López Rincón.

In the spare moments this newsletter leaves us, we like to solve messy problems in personal data protection and artificial intelligence. If you’ve got one of those, give us a little wave. Or contact us by email at jgh(at)jorgegarciaherrero.com


🗞️News of the Data-world 🌍

.- Researchers at UCL studied the potential benefits of replacing the current (and awful) social buttons “like” and “share” with “trust” / “distrust”, with more than promising results: the virality of misinformation and fake news decreases, and the overall quality of the social feed improves.

Of course, when this study was shared on social media, everyone pointed out the obvious: that the tribes for and against each piece of content would formally or informally organize to “hack” the system and vote as trustworthy what isn’t — and vice versa. You can see this with the absurdly high or low IMDb ratings of any movie or series with a remotely polarizing plot. Doesn’t matter: all efforts in this direction should be applauded, and we do so from the modesty of this newsletter.

.- Microsoft AI Teams will reveal your location to your boss by interpreting little clues like the Wi-Fi network you connect to. Your little heart says “what the hell”. Microsoft says the “feature” is opt-in. Clara Hawking says yes, but that opt-in should be decided by the worker, not the boss — which is how the green field, blue logo, red alert idea company set it up.

Luis Alberto Montezuma comments on the Dutch authority’s intervention

Summary document from the Dutch DPA on the public consultation process on AI systems for social scoring purposes. Via San Luis Montezuma.

.- If you want your AI-powered cyberattack to fly under the radar, just fragment it into simple tasks and assign them to different models, so none of them sees “the complete picture” — or so Lukasz here tells us.

.- Amazon is having a good week thanks to the DSA/DMA duo. The General Court of the EU said nope to the appeal against its designation as a DSA VLOP, and now the European Commission intends to label AWS a DMA gatekeeper (which Amazon’s core business already is).

Do they do it out of respect for the typical corporatism of these professional groups? What do you think, Baldomero?

Do they do it to avoid users hurting themselves by following outputs that are usually well-documented but poorly directed, due to (logically) lack of context? Take a guess, Pichurri.

Nope, they just prohibit it via T&Cs with the sole purpose (and remember: this isn’t automatically valid, at least not in the EU — thanks to all those horrible and convoluted rules we now need to repeal).

📄High density doc for data junkies and papers of the week

.- We finally have the Digital Omnibus draft published. At least to check if the leaked version was accurate or not. Either way, still a long way to go.

.- This one by the legendary Cass Sunstein, describing the limits of current AI models. It deserves at least a quick spin through NotebookLM. At least.

.- This other one by Dave Michels, Christopher Millard, Ian Walden, and Ulrich Wuermeling looks at the cracks in the DPF, the so-called entente cordiale (so to speak) between the EU and the US. It perfectly complements the Masters of Privacy episode by Sergio Maldonado with Gloria Gonzalez Fuster on the (so far) dismissal of Latombe’s actions against the DPF.

.- This one, signed by a bunch of folks at Amazon Web Services, proposes a solution (complicated, like all truly real solutions, but promising) to the security holes in Agentic AI. I’m linking Stuart Winter-Tear’s comment here, because I know very few will actually read the paper.

.- Watermarks in the Sand: Impossibility of Strong Watermarking for Generative Models — I think the title leaves little mystery about its content.

📎Useful tools

This EFF blog post looks at whether the most-used messaging apps encrypt the backup copies of our (gulp) chats. The topic suddenly gained relevance with the trial of the Attorney General, but it’s very relevant “in and of itself”.

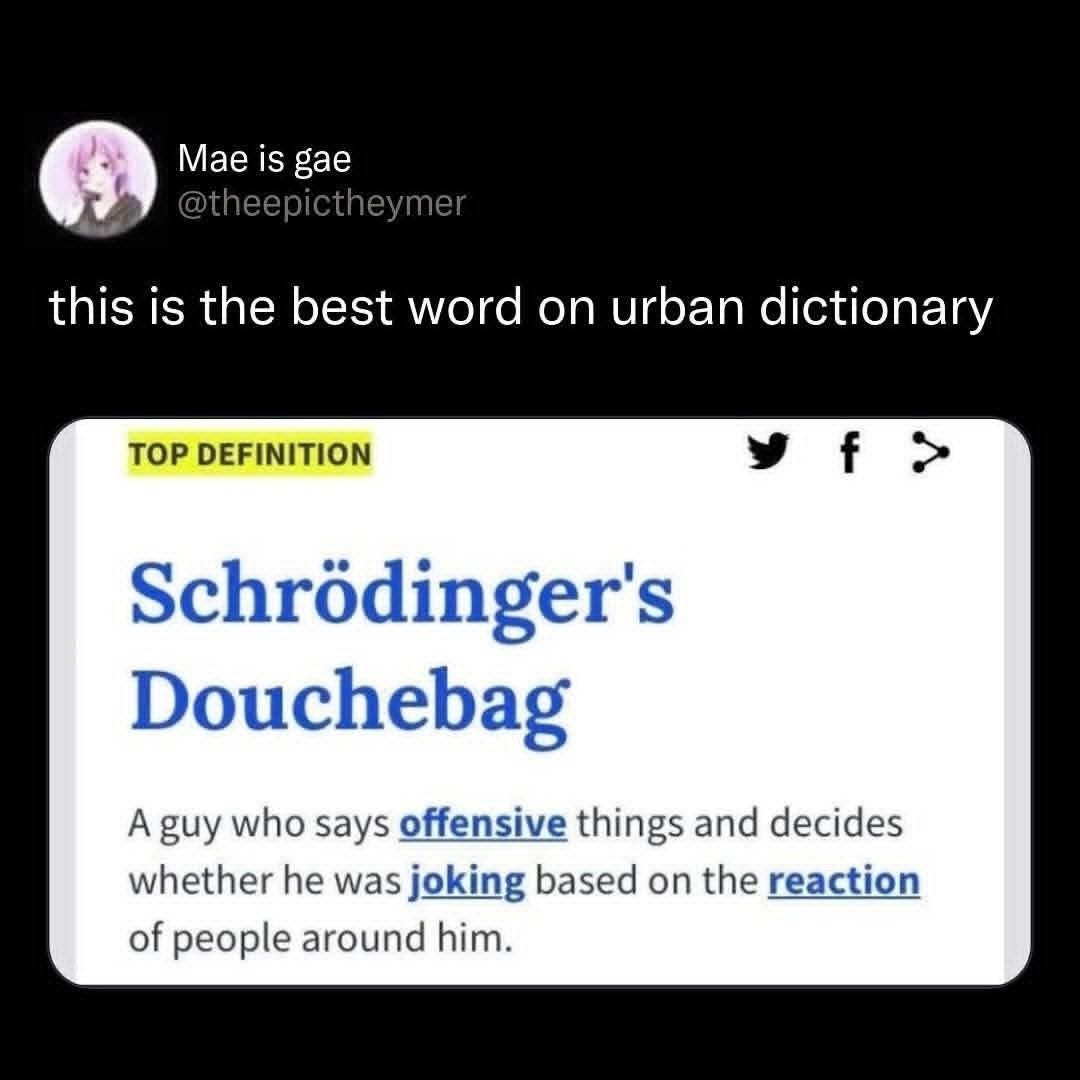

💀Death by Meme🤣

🤖NoRobots.txt or The AI Stuff

.- These 50 minutes with Snowden (not the whistleblower, the other one: the Cynefin framework guy) are pure gold.

🙄 Da-Tadum-bass

So proactive about cat privacy, and so unresponsive to the cats’ human slaves.

If you think someone might like this newsletter, or even find it useful, feel free to forward it.

If you miss any document, comment, or bit of nonsense that clearly should have been included in this week’s Zero Party Data, write to us or leave a comment and we’ll consider it for the next edition.