In late November, the Spanish AEPD published its internal policy on the use of generative artificial intelligence. They tell us they're going to use it everywhere, so we wondered how it would hold up against the revised guidelines the EDPS imposes on European institutions regarding the data protection compliance of such systems. The EDPS document is more technical and the AEPD one more governance-focused, but they are worth comparing.

Of course, the real fun is having AI itself assess the proper use of AI, with the AI supervised and its output reviewed, so it doesn't go off the rails.

We asked Claude, and it concluded that the AEPD is doing quite well: 85% compatibility, even though the EDPS document is not among the sources the AEPD cites in its bibliography (the AEPD does, however, draw on two specific publications from the EDPS TechDispatch series: Human Oversight of Automated Decision-Making and Large Language Models (LLM)).

Time will tell, but the AEPD's transparency about how it intends to use a technology that can easily spiral out of control is still worth appreciating.

Principles or elements from the EDPS doc that the AEPD applies:

Clear purpose definition ✅ Implemented – Each use case requires an extended description and impact analysis.

Roles and responsibilities ✅ Implemented – Defined roles for the data controller within the organization, the functional and technical leads, the DPO and the AI Officer.

Impact assessment (DPIA) ✅ Implemented – A DPIA is considered whenever the GDPR requires one.

Data minimization ✅ Implemented – Specific reference to minimization and to restricted-access configuration.

Data accuracy ✅ Implemented – The design policy includes verification and validation of results.

Transparency ✅ Implemented – Transparency and explainability policy: documentation, traceability, information visible to users.

Data security ✅ Implemented – Cybersecurity policy: ENS (Spain's National Security Framework) categorization, specific controls for generative AI.

Human oversight ✅ Implemented – No automated decisions without effective human supervision.

Processing records ✅ Implemented – The EDPS refers to the records of processing activities kept by EU institutions under Regulation 2018/1725 (their counterpart to the GDPR); the AEPD addresses processing records as well.

Individual rights ✅ Implemented – Mentioned, though not thoroughly developed.

Structured governance ✅ Implemented – Governance model with defined roles and procedures.

Partially addressed by the AEPD:

Development vs. deployment classification ⚠️ Partial – The AEPD distinguishes phases, but not with the EDPS terminology.

Web scraping ⚠️ Not explicitly mentioned – It could fall under the general analysis of trends and patterns in open information (possibly including the analysis of this newsletter).

Anonymous models ⚠️ Not in depth – The AEPD doesn't analyze the criteria for deeming a model anonymous, but notes the importance of anonymization.

Consent as legal basis ⚠️ Different approach – The EDPS strongly advises against it; the AEPD doesn't address legal basis.

International transfers ⚠️ Not addressed – The EDPS devotes a section to them; the AEPD only mentions them as an area where AI may be applied: "requests for international transfer agreements."

Right to rectification/erasure ⚠️ Underdeveloped – The EDPS delves into the technical hurdles; the AEPD only mentions it briefly.

Bias and discrimination ✅/⚠️ Identified as a risk – The AEPD flags it as a threat, but develops it less than the EDPS.

Hallucinations ⚠️ Indirect mention – The AEPD addresses them as "system inefficiency."

EDPS points not covered by the AEPD:

Specific guidance on outputs with personal data: EDPS differentiates responsibilities depending on the data source.

Specific anonymization techniques for training data: the EDPS mentions differential privacy, while the AEPD refers more generally to "reducing memorization of personal data in the model."

Red teaming: Mentioned by EDPS, but not by AEPD. Isabel Barberá, get ready.

Model inversion attacks: the EDPS lists specific generative AI threats; the AEPD is more generic.

Prompt injection and jailbreaks: explicitly mentioned by the EDPS; the AEPD glosses over them, alluding to them only indirectly with Spanish terms.

RAG (retrieval-augmented generation) and its specific risks: both documents mention it, but the EDPS goes into more detail. The AEPD focuses on RAG as support for pseudonymization and anonymization, and notes that RAG systems could streamline its inquiry channels, the Youth Channel and the DPO channel. Think of the typical agents now popping up in web forms to answer simple, database-backed queries almost autonomously.

If we had to point out the weakest part, it would undoubtedly be the hardest one today: effective human supervision. The AEPD policy includes strong statements excluding automated decisions under Article 22 GDPR and "implementing generative AI systems in AEPD processes in such a way that there is no room for doubt in the application of the previous paragraph."

But that, dear reader, comes cheap. We expected the AEPD to take the plunge, as we are doing here, and spell out concrete measures in its policies: not only to ensure that no process ends without human supervision, but also that an independent third party can verify that supervision's effectiveness (that the supervisor can assess and mitigate both the system's biases and their own when using the tool), and that everything is logged so the information can be retrieved to prove and improve the system's performance.
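What "everything is logged and can be retrieved" might look like in practice: a minimal sketch, assuming a hypothetical `ReviewRecord` written for each AI-assisted output a human reviews, stored in an append-only log where each entry's SHA-256 hash also covers the previous entry's hash, so an independent auditor can detect after-the-fact edits. None of this comes from the AEPD policy; the class names and fields are illustrative only.

```python
import hashlib
import json
from dataclasses import dataclass, asdict


@dataclass(frozen=True)
class ReviewRecord:
    """One human review of an AI-assisted output (hypothetical fields)."""
    case_id: str
    model_output: str
    reviewer: str
    decision: str   # e.g. "accepted", "modified", "rejected"
    rationale: str  # why the reviewer agreed or disagreed with the tool


class OversightLog:
    """Append-only log: each entry's hash covers the previous entry's hash."""

    GENESIS = "0" * 64

    def __init__(self) -> None:
        self.entries: list[dict] = []

    @staticmethod
    def _digest(prev_hash: str, record: dict) -> str:
        payload = json.dumps(record, sort_keys=True)
        return hashlib.sha256((prev_hash + payload).encode()).hexdigest()

    def append(self, record: ReviewRecord) -> str:
        prev_hash = self.entries[-1]["hash"] if self.entries else self.GENESIS
        data = asdict(record)
        digest = self._digest(prev_hash, data)
        self.entries.append({"record": data, "prev": prev_hash, "hash": digest})
        return digest

    def verify(self) -> bool:
        """Recompute the chain; editing any entry or removing a non-final one breaks it."""
        prev_hash = self.GENESIS
        for entry in self.entries:
            if entry["prev"] != prev_hash:
                return False
            if entry["hash"] != self._digest(prev_hash, entry["record"]):
                return False
            prev_hash = entry["hash"]
        return True


log = OversightLog()
log.append(ReviewRecord("case-001", "draft reply", "reviewer-a", "modified", "tone too categorical"))
log.append(ReviewRecord("case-002", "risk: low", "reviewer-b", "rejected", "missing context"))
print(log.verify())  # True
log.entries[0]["record"]["decision"] = "accepted"  # simulated tampering
print(log.verify())  # False
```

The hash chain only proves integrity of what was written; truncating the last entries goes unnoticed unless the latest hash is also stored somewhere the operator doesn't control, and none of it measures whether the reviewer actually exercised independent judgment.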

That said, the Agency deserves major credit for doing what, so far, very few have dared to do.

Needless to say, Claude didn’t write this last part. It shows, right? Right?

You are reading ZERO PARTY DATA, the newsletter on current affairs and tech law by Jorge García Herrero and Darío López Rincón.

In the spare time this newsletter leaves us, we like to solve tricky situations in personal data protection and artificial intelligence. If you have any of those, give us a shout, or email us at jgh(at)jorgegarciaherrero.com


🗞️News of the Data-world 🌍

.- The ICO is going to spend some time investigating certain mobile games, and about time too. Authorities are starting to realize that this segment of video games ranks #1 in the use of all sorts of addictive and deceptive patterns. The free-to-play business model thrives purely on microtransactions.

They'll be focusing heavily on the near-nonexistent age verification, but wait until they discover dynamic difficulty adjustment: raising or lowering the difficulty depending on whether you seem frustrated enough to rage-quit or likely to pay to pass the level. The Brits should have no trouble spotting this kind of processing of dubious legitimacy, because back in 2019 they already nailed one CEO in a hit-and-run parliamentary hearing.

.- The CJEU just dropped a Fashion ID 2.0 with its Russmedia Digital (C-492/23) ruling. Same core, same conclusion: the entity behind a marketplace is responsible for the processing of personal data contained in ads generated and published by its users.

Specifically, it stresses the need for appropriate measures to identify, before publication, the ads that might contain sensitive data and to ensure a proper legal basis for their use (explicitly mentioning the most likely exception under Article 9: explicit consent). Links to the press release, the ruling, and the video in which the CJEU briefly explains the decision (the first time we've seen them post something like this on LinkedIn).

More details on this major CJEU ruling in the special edition we released last Friday: Russmedia ruling CJEU C-492/23

.- The first big slap from the EC under the DSA goes to... Elmo. It couldn't have been anyone else, given the guru who blew up the compliance and data protection team right after dropping off, somewhere, the sink he was carrying as an accessory.

€120 million for the deceptive design of the verification checkmark (maybe that's why people were suddenly saying their classic verification had been restored without paying), the lack of transparency and accessibility in the advertising repository, friction built into the default design of ad controls, and the failure to give researchers access to public data.

Now comes X's tantrum, along with deadlines of 60 and 90 days to present measures and an action plan to fix things.

.- The AEPD has launched its lab with lots of things and, among them... a blog. Did you know you can submit posts for publication? Well, now you do: here are the editorial policy rules.

📄Papers of the week

.- Ultra fresh from yesterday, it just made it into this edition: an evaluation paper on GPC, or Global Privacy Control (roughly: a "Do Not Track"-style standard backed by the European Commission, so your browser can automatically signal your choice on cookies). The fun part? It's signed by something like the Avengers of European privacy: none other than Sebastian Zimmeck, Harshvardhan Pandit, Frederik Zuiderveen Borgesius, Cristiana Santos, Konrad Kollnig, and Robin Berjon. Not everyone who matters is there, but everyone who is there matters.
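For the curious, the mechanics are simple: the GPC specification has a participating browser send a `Sec-GPC` request header whose only valid value is `1` (scripts can also read `navigator.globalPrivacyControl`). A minimal server-side sketch, assuming request headers arrive as a plain name-to-value mapping; the function name `gpc_opt_out` is hypothetical, and what a site is legally obliged to do with the signal is exactly what the paper debates.

```python
from typing import Mapping


def gpc_opt_out(headers: Mapping[str, str]) -> bool:
    """Return True if the request carries a Global Privacy Control signal.

    The GPC spec defines the header `Sec-GPC: 1`. HTTP header names are
    case-insensitive, so names are lower-cased before the lookup.
    """
    normalised = {name.lower(): value.strip() for name, value in headers.items()}
    return normalised.get("sec-gpc") == "1"


# Hypothetical request headers, for illustration only
print(gpc_opt_out({"Sec-GPC": "1", "User-Agent": "example"}))  # True
print(gpc_opt_out({"User-Agent": "example"}))                  # False
```

Note the asymmetry: the signal is either present (opt-out) or absent, and absence means no preference was expressed, not that the user consented.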

Two papers, both by Philippe Hacker:

.- Can you get yourself into trouble by modifying the core purposes of a general-purpose AI model? The detailed answer to this and many other questions is here: The regulation of fine-tuning: Federated compliance for modified general-purpose AI models.

.- A critical take on the Digital Omnibus, but far more open-minded than NOYB's and those of all the experts who don't work and have time to write about legislative proposals, here: (again Hacker, with Robert Killian) "Simplifying" European AI Regulation: An Evidence-based Study.

📖 High density doc for data junkies ☕️

.- Adrián Todolí tells us that the Spanish Supreme Court has ruled that a cyberattack can be considered force majeure justifying a furlough (ERTE). The event must be unforeseeable, unavoidable and beyond the company's control. Therefore, a cyberattack could qualify only in extreme cases where the company demonstrates diligence and the absence of negligence.

.- Noyb's user-friendly compilation on the Omnibus, put together after the leaked draft came out. They promise more, but it's a handy document to keep around: the main changes, before and after, plus an attempt to show how those changes might land in the real world. Expect tons of similar compilations.

💀Death by Meme🤣

🤖NoRobots.txt or The AI Stuff

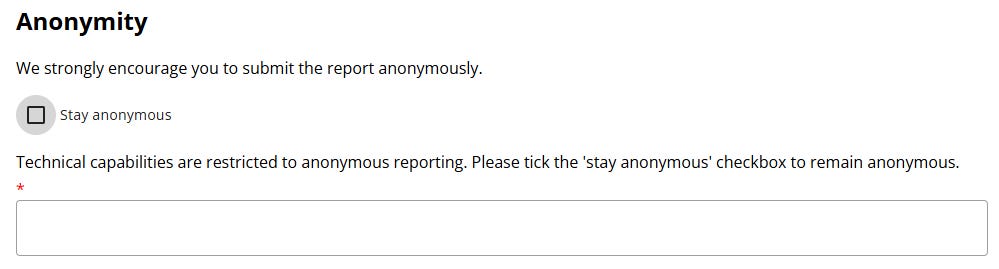

.- The new European AI Office has gifted us a whistleblower tool for reporting any AI Act violations we come across. It is very similar to the whistleblowing forms you've surely had to review and tweak (sometimes with a well-known vendor behind them), with both an identified version and an anonymous submission version.

If the whole point is anonymous reporting, why can you uncheck the box? And when you do, you get a message that reads more like a liability waiver than a polite request to keep it checked.

The confidentiality policy repeatedly states that no data is collected, but couldn't there be exceptional cases where data has to be requested? It's the same issue that arises with any whistleblowing channel.

.- They also give us another interactive form/tool with minimal technical development (the AI Act Compliance Checker) to help determine the risk level and the main obligations that apply under the AI Act, depending on whether you're dealing with an LLM, a provider, a general-purpose system, etc. Handy, so you don't always have to go to the full text.

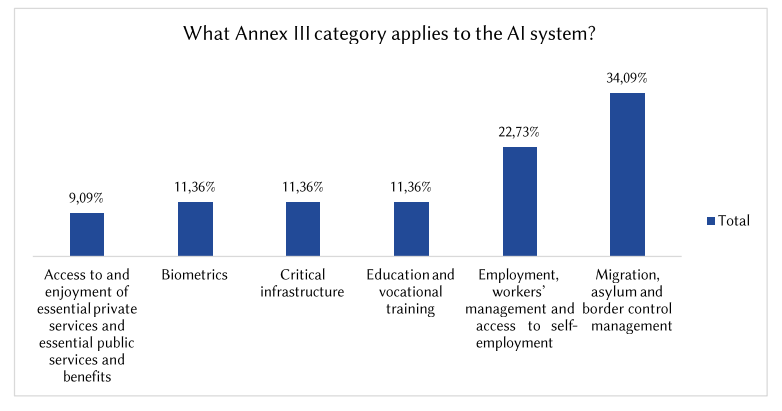

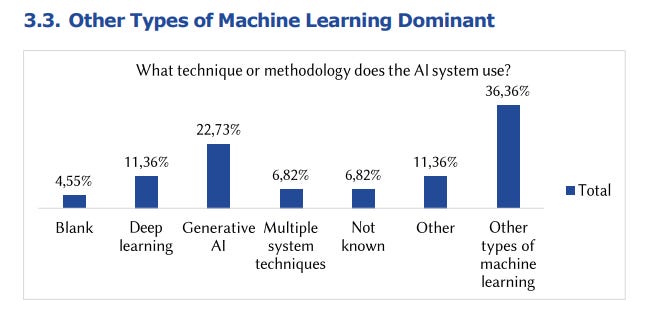

.- The EDPS has presented a mapping of AI use in the public sector. It’s not the big document where you’ll learn technical stuff, but it reveals interesting data. The juiciest parts:

The second most common use is HR management and recruitment, even though the EDPS expected the top use to be migration control and enforcement systems.

The most used category is "other machine learning techniques," with no further detail. Generative AI itself comes second.

🙄 Da-Tadum-bass

Haven't you run into the typical top-tier provider loaded with AI certifications yet? Everything's wonderful... until an authority shows up to make you a TikTok.

Great movie The Death of Stalin, by the way.

If you think someone might like this newsletter, or even find it useful, feel free to forward it.

If you miss any document, comment, or bit of nonsense that clearly should have been included in this week’s Zero Party Data, write to us or leave a comment and we’ll consider it for the next edition.