#44 AI promised us the Moon, but all we'll get is... more ads

Like Facebook, only worse

Sam Altman announces that ChatGPT will include ads.

Hardly surprising, given OpenAI’s CAPEX and cashflow.

OpenAI, the company that was going to democratize artificial intelligence for the benefit of all humanity, has soon discovered that this same humanity is much more profitable if you can slip in banners between prompt and output.

Welcome to the future, pal. The planet’s brightest talent created systems that can write poetry, solve differential equations, and simulate philosophical conversations—all so that in the end they recommend you Nivea while planning your vacation.

But beyond highlighting yet another case of enshittification—that process by which a digital service is born useful, grows addictive, and dies unbearable—in Big Tech (the fastest in history, I’d say), I wonder...

Whose fault is it?

Sam Altman’s?

This guy is not known for his human qualities nor for his reliability as a person, brother, executive or prophet... (you just need to read “The Empire of AI” by Karen Hao, something you would have already done if you had an ounce of self-respect)

But he sure has raised money—plenty of it...

Fierce AI competition?

Elon Musk and Sam Altman (and Dario Amodei, and others) created OpenAI so that Google wouldn’t monopolize the development of the promised AGI (general AI that nobody knows what it is or when it’ll arrive).

Dario Amodei created Anthropic because he was fed up with Sam Altman.

Elon Musk created xAI because he was fed up with Sam Altman.

OpenAI’s board kicked out Sam Altman because...

But the ball is between OpenAI, Google, Anthropic, China, and far behind, xAI desperately trying to get relevance by posting bikinis. Meta and Apple have fallen behind.

Ours, the users’?

Only 5% of users pay.

Companies are indeed paying for AI, partly for compliance, partly out of fear their secrets and data might get stolen, and partly because of FOMO.

Individuals, for the most part, are just goofing around (bikinis, Ghibli or Simpson-style drawings, recipes, etc.) barely scratching the surface of what can be achieved.

Paid AI’s penetration into daily life is ridiculously low.

Not because the tech isn’t useful, but because we’ve been conditioned for decades to believe everything digital should be free. Google got us used to searching for free. Facebook convinced us social networks were a human right magically funded by the ether. And now, when something genuinely revolutionary appears, our Pavlovian response is: “Interesting, but is there a free version?”

And meanwhile, these Elon types are slipping full guarantees into the PRIVACY POLICIES of their AI services:

Other than that, some weeks it’s best not to think too much about what’s going on. We’re still in January 2026, but we can’t escape the sad burning dog meme.Por lo demás, hay semanas que es mejor no pensar mucho en lo que está pasando. Seguimos en enero de 2026, pero no salimos del triste meme de perro en llamas.

You’re reading ZERO PARTY DATA, the newsletter on current affairs and tech law by Jorge García Herrero and Darío López Rincón.

In the spare time this newsletter leaves us, we enjoy solving tricky situations related to personal data protection and artificial intelligence regulation. If you’ve got one of those, give us a wave. Or contact us by email at jgh(at)jorgegarciaherrero.com.

Thanks for reading Zero Party Data! Sign up!

🗞️News from the Data World 🌍

.- A curious news item that pops up from time to time: “the German army can’t get new recruits because of the GDPR”. In reality, it’s a concatenation of factors: legal limitations on capturing data from potential recruits while they’re minors, on segmentation and profiling, on excessive data gathering… not to mention the lack of interest from younger generations in becoming cannon fodder.

.- In some leading 3D animated film, non-existent analog animators’ fingerprints were “included” on animated characters. Well, this report points out the fingerprints of BigTech in each of the modifications proposed by the Commission for the Digital Omnibus in the GDPR, AI Act, Data Act, and others. As interesting as it is unsurprising. Again, my opinion on the Omnibus is that the proposals ignore several of the real issues in the regulations (international transfers, anyone?) to brazenly attack the foundations of citizens’ rights.

.- Researchers from the Computer Security and Industrial Cryptography group discovered that Google’s FastPair protocol has critical flaws in 17 audio accessories from ten manufacturers (Sony, Jabra, JBL, Marshall, Xiaomi, Nothing, OnePlus, Soundcore, Logitech, and Google). With the WhisperPair technique, any attacker within Bluetooth range can silently pair with already connected headphones or speakers, take control of the microphone, inject audio, or track location via the FindHub feature. All of this without any user interaction. Nice. Content by Sayon Duttagupta and Seppe Wyns.

.- Security breach news? This time at PcComponentes. The official statement clarifies it was actually a bit of “credential stuffing.”

I will try and explain it in “three easy steps”:

1.- Collect login and password records from other dumps on the deep web (previous breaches that did happen).

2.- Check if the user uses that password on other services, like PcComponentes. If yes, you create a small database of credentials that work on this site, even if you haven’t gained access.

3.- Notify them that you’ve accessed their account and try to extort money as if you actually had, putting that database on the table as if it were a tiny fraction of what you “achieved” with your—ha—fictitious breach.

Sure, those credentials allow access, but no: my dear, there was no breach.

📖 High density docs for data junkies ☕️

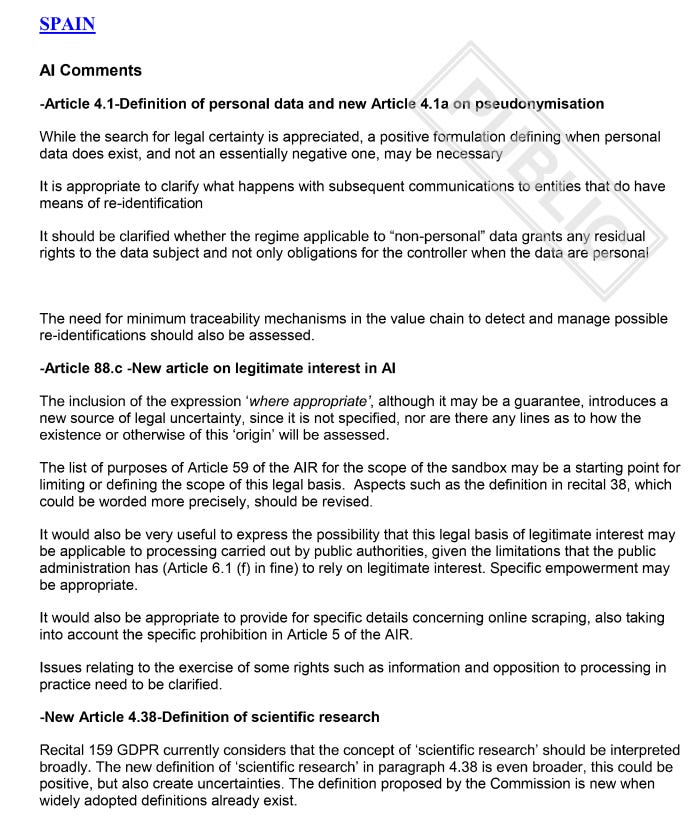

.- Thanks to Luis Montezuma, we’ve reached the compilation doc of comments from many countries/delegations/authorities about the Digital Omnibus. If you’re thinking of dumping this 71-page document into the very efficient Notebook LLM for a summary, sorry to say it won’t work. In the classic bureaucratic move of scanning to PDF, they’ve created a sort of anti-AI filter. Time to read it the old-fashioned way, or look for another way around.

Here’s a snapshot of part of Spain’s comments. Starting with the new definition of personal data.

-. The previous one would have ended with the punchline that we all swallow this appetizer until the real meal arrives, but the long-awaited joint document from the EDPB and EDPS came out yesterday. At least, in the section focused on the RIA amendment affecting data. Don’t expect to find anything about the new possible definition of personal data, but yes to the authorities being protective. Alejandro Prieto Carbajo explains it very clearly here, but basically, simplifying must not mean reducing guarantees. And a system from Annex III should still be included in the register, even if the provider considers it not high risk.

Privacy Company conducted a DPIA for the Chief Information Office of the Dutch Government—the national videoconferencing platform based on Cisco Webex and managed by the Dutch Tax Agency. The link, again, courtesy of Saint Luis Montezuma.

.- The Grok/X.com BikiniGate in light of the RIA: Laura Caroli, Laura Lazaro Cabrera, and David Evan Harris contribute a systematic analysis: Grok as a systemic risk model, the possibility that individual users might be affected, the evolution of the general-purpose AI code of conduct (signed by X.ai)...

.- The CNIL explores the viability of a user giving consent for cookies and other trackers simultaneously on multiple devices, analyzing how ePrivacy and the GDPR address this practice. The technical requirements to link consent to a persistent digital identity are described, and the risks of fragmented consent when using different browsers or operating systems are evaluated.

🤖NoRobots.txt ot AI Stuff

.- MIT CSAIL researchers Alex Shipps, Gabriel Grand, Jacob Andreas, Joshua Tenenbaum, Vikash Mansinghka, Alex Lew, Alane Suhr present DisCIPL (Distributional Constraints by Inference Programming with Language Models), a framework where a large LLM acts as a “planner” and distributes execution among smaller models, Oompa Loompa style. In benchmarks against GPT‑4o, Llama‑3.2‑1B alone, and reasoner 01, DisCIPL achieves similar accuracy to o1 but with (look at this) 40% less reasoning length and 80% cost savings, thanks to followers being 1,000–10,000 times cheaper per token. It also outperforms GPT‑4o in real tasks like shopping lists with a budget, travel itineraries, and grant proposals under strict constraints. The authors highlight the potential to scale the number of follower models and to use the same architecture both as leader and follower, opening the door to self-formalized mathematical reasoning and to fuzzy preferences that can’t be codified into strict rules.

🔗Useful Tools

.- A quiz that lets you test whether you can spot something generated by AI: image, video, and synthetic voice. Similar to the one I shared a while ago on dark patterns, but with the current hot technique.

.- The new CJEU portal is a pain. Used to locating rulings by article and paragraph? I have bad news: this no longer works. But Max Schrems has shared a nifty trick: The process is: (1) go to the Opinions and decisions tab excluding Cases and all documents (2) identify the CELEX number of the European regulation (not the regular number like 2016/679 for the GDPR); (3) add a dash at the end of that CELEX number; (4) include the article using the platform’s notation, e.g. A07 for Article 7; and (5) launch the search. Daniela T. discovered this gem.

💀Death by Meme🤣

📄The paper of the week

.- Extracting books from production language models by Ahmed Ahmed, A. Feder Coopery, Sanmi Koyejo, and Percy Liang

“Many unresolved legal questions about LLMs and copyright focus on memorization: whether specific training datasets have been encoded in the model’s weights during training, and whether those memorized data can be extracted from model outputs. Although many believe LLMs do not memorize, recent work shows that substantial amounts of copyright-protected text can be extracted from open-weights models. However, it remained unclear if similar extraction was possible for production LLMs, given the security measures implemented in these systems. We investigate this issue in Claude 3.7 Sonnet, GPT-4.1, Gemini 2.5 Pro, and Grok 3.”

The answer: From Gemini 2.5 Pro and Grok 3—without major tricks or spells—76.8% and 70.3%, respectively, of Harry Potter and the Philosopher’s Stone were extracted. In some cases, almost verbatim books (e.g., nv-recall = 95.8%) were extracted from a jailbroken version of Claude 3.7 Sonnet.

And this is just the paper’s summary.

🙄 Da - Ta - Dum Bass!!!

If you think someone might like—or even find this newsletter useful—feel free to forward it.

If you miss any document, comment, or bit of nonsense that clearly should have been included in this week’s Zero Party Data, write to us or leave a comment and we’ll consider it for the next edition.

The enshittification trajectory from useful to ad-riddled is brutal here, especially given OpenAI's original nonprofit framing. The 5% paid conversion statistic is damning becuase it reveals we've been trained to expect digital miracles for free while burning through billions in inference costs. Watched this same pattern play out with early social media, where every "revolutionary platform" eventually finds its way to the same advertising model that everyone claims to hate.