#47 Does banning really achieve anything, or just make it look like you’re doing something?

Two examples: the ban on making sexual deepfakes with AI and the ban on social media access for minors under 16.

This edition goes way beyond what fits in an email, but trust us: the occasion is worth it.

Last Thursday the European Parliament proposed adding to the list of prohibited AI practices (Art. 5 AI Act) the use of AI to generate sexualized video, image, or audio content relating to natural persons (sexual deepfakes, basically).

Would this measure be effective?

Checkmate for deepfakes? Or another example of virtue-signaling (like banning social networks for the under-whatever-age crowd) that will come to nothing?

IMHO, the latter:

In theory, this reform would mean:

Fines of up to €35 million or 7% of total worldwide annual turnover, whichever is higher: the most severe tier in the Regulation. A huge change from the current approach, under which a deepfake only has to comply with the transparency and labeling obligations of Article 50.

Also, the ban would apply to all AI systems regardless of when they were placed on the market or put into service: no transitional period and no possible “grandfathering”.
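To put the first point in numbers: Art. 99(3) AI Act caps fines for Art. 5 infringements at €35 million or 7% of total worldwide annual turnover for the preceding financial year, whichever is higher, and Art. 99(6) flips that to whichever is lower for SMEs and start-ups. A minimal sketch of that ceiling (the function name is ours, and it only computes the upper bound: the actual amount is set case by case):

```python
def art5_fine_cap_eur(worldwide_turnover_eur: float, is_sme: bool = False) -> float:
    """Ceiling for an Art. 5 AI Act fine: Art. 99(3), with the Art. 99(6) SME rule."""
    fixed_cap = 35_000_000.0
    # 7% of total worldwide annual turnover (preceding financial year).
    turnover_cap = 7 * worldwide_turnover_eur / 100
    # Undertakings in general: whichever is higher. SMEs and start-ups: whichever is lower.
    return min(fixed_cap, turnover_cap) if is_sme else max(fixed_cap, turnover_cap)


print(art5_fine_cap_eur(1_000_000_000))             # 70000000.0
print(art5_fine_cap_eur(100_000_000))               # 35000000.0
print(art5_fine_cap_eur(100_000_000, is_sme=True))  # 7000000.0
```

So for a €1 billion turnover the ceiling is €70 million, while for €100 million the €35 million figure applies, or €7 million if the company is an SME.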

But, as so often happens, whoever came up with this proposal means well but has about as much street smarts as Venice has streets:

AI models running “locally”

The vast majority of sexual deepfakes are not generated through commercial APIs (which already have safeguards, except those run by “crazy uncle types who live to annoy” like Elon) but with open-source models run locally.

So:

.- From the model provider’s perspective: open-source model developers (for example, the Hugging Face community) will argue, and quite rightly, that they release a general-purpose model, not a system designed specifically to generate sexual deepfakes.

.- From the perspective of domestic users/deployers: the Art. 5 ban would not apply to the use of these models in the domestic sphere (check the definition of “deployer” in Art. 3(4), which excludes use in the course of a personal, non-professional activity).

Ah, the irony!

The funny thing is that commercial actors like xAI/Grok, who are the most visible and the ones who caused the scandal, are ferociously cancellable (or slap-worthy, if we’re feeling dramatic) under the existing legal framework (DSA).

And all of that while sparing ourselves the disruption, greater or lesser, that this reform would cause across the industry.

As we’ve been saying throughout this turbulent 2026, we have to start by applying what we already have, and then we’ll see what else we pull out of the hat.

For our part, last Tuesday we pointed out a way to “actively mitigate the urge to generate” non-consensual sexual deepfakes that can be applied tomorrow.

You’re reading ZERO PARTY DATA, the newsletter on current affairs and tech law by Jorge García Herrero and Darío López Rincón.

In the spare time this newsletter leaves us, we enjoy solving tricky situations related to personal data protection and artificial intelligence regulation. If you’ve got one of those, give us a wave. Or contact us by email at jgh(at)jorgegarciaherrero.com.


🗞️News from the Data World 🌍

.- Banning social networks for minors under x years old leads to things like the Discord affair, our second example:

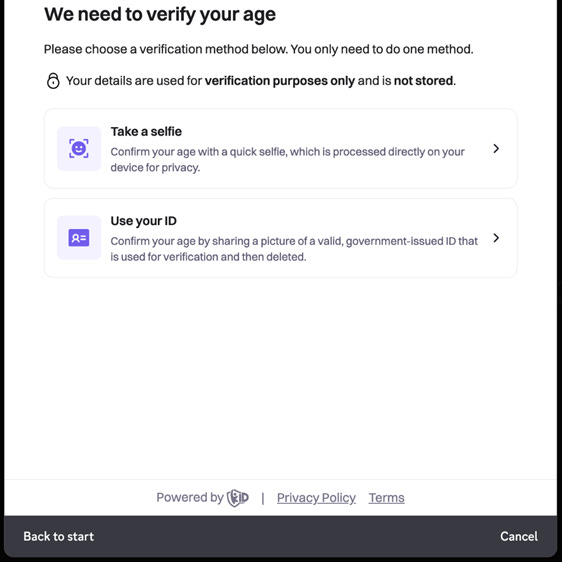

It had to happen with so many politicians banging on about it: Discord jumps into the ring by requiring age verification for everyone starting in March. Yes, the same Discord that the CNIL fined €800,000 for breaching Arts. 5, 13, 25 and 32 GDPR and for going through life without a DPIA.

What do we have? Show your face for facial age estimation, or show your ID.

-Everyone is a minor by default: it looks like they’ve learned from their mistakes here, and starting from the assumption that everyone has certain features disabled is not a bad practice. The same goes for making it easy to appeal and try again.

-Options: scanning your ID or your face. True, they’ve taken the side road of facial age estimation, but it remains to be seen whether they can convince anyone that it escapes being biometric data under Art. 9 (*on the nuance that the purpose is not unique identification but “just” measuring your wrinkles to tell whether you’re young or old*). Their warning that they may end up requiring both if there are doubts, however, works against them on proportionality:

Then there’s what the AEPD likes: first assessing whether there’s a genuine need. If the minimum age on Discord is 14, does mandatory ID even fit there, and should it be offered as an option at all? Isn’t there a less invasive alternative that would make the facial scan unnecessary too? We assume ID/DNI processing is limited to avoid undue risks, even though they say nothing about it (*and that no copy is kept, since hotels are under scrutiny for exactly that*).

-Lawful basis. Who knows, but certainly not consent. The super-cool FAQs say nothing, and the privacy policy even less: “Additionally, we will need your date of birth and, in some cases, we will request additional information to verify your age.” Even if Discord is an entity subject to the DSA, the DSA does not impose an obligation to have an age verification system. Contract? I hope they don’t try it. So it will probably be legitimate interest, leaning on the DSA for support (with the open question of whether there is any real right to object here, or whether we’ll play at declaring the interest overriding and the objection denied). Since they say nothing, let’s hope it’s better worked out than the DPIA (or lack of one) behind the €800k sanction.

Careful with the DSA, which may almost count against Discord. We tend to forget it, but the famous Art. 28(3) reminds us: “Compliance with the obligations laid down in this Article shall not require providers of online platforms to process additional personal data in order to assess whether the recipient of the service is a minor.”

-Retention: one of the few things they clarify, even if not presented as such. The point about storage on the device isn’t bad either, but it’s almost the only relevant data protection information they offer. Art. 13 at its finest.

-DPIA and real information? I’m very much afraid the image illustrating this post is what we’re going to get: the classic attempt by a controller to offload everything onto a “processor” that goes along with it because it won’t actually be treated as one. Hands up if you think there’s neither processor nor joint controller here: just independent controllers, period.

-Last-minute update: after the backlash, Discord clarified the issue. They make it clearer that most adults won’t need to verify anything for the way most people use the platform, but they add a point of doubt: “For the majority of adult users, we will be able to confirm your age group using information we already have. We use age prediction to determine, with high confidence, when a user is an adult. This allows many adults to access age-appropriate features without completing an explicit age check.” Could there be another, undeclared alternative that makes the others unnecessary? Please, declassify more information.
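The “minor by default” pattern, plus the age-prediction shortcut Discord now mentions, boils down to a default-restricted decision: stay in the restricted group unless there is positive evidence of adulthood, trying the least invasive source first. A hypothetical sketch of that logic, not Discord’s implementation: the names and the 0.95 threshold are our assumptions, since Discord only speaks of “high confidence”. Putting prediction first is what would turn facial estimation and ID into the exception, which is exactly where the necessity question bites.

```python
from enum import Enum
from typing import Optional


class AgeGroup(Enum):
    MINOR = "minor"  # default: restricted features
    ADULT = "adult"


# Hypothetical value: Discord publishes no threshold, only "high confidence".
PREDICTION_THRESHOLD = 0.95


def resolve_age_group(predicted_adult_prob: Optional[float],
                      explicit_check_passed: Optional[bool]) -> AgeGroup:
    """Start as MINOR; move to ADULT only on positive evidence."""
    # 1. Age prediction from data already held (no new data collected).
    if predicted_adult_prob is not None and predicted_adult_prob >= PREDICTION_THRESHOLD:
        return AgeGroup.ADULT
    # 2. Explicit check (facial estimation or ID), only when the user goes through it.
    if explicit_check_passed:
        return AgeGroup.ADULT
    # 3. No evidence, low confidence or a failed check: stay restricted (appeal/retry possible).
    return AgeGroup.MINOR


print(resolve_age_group(0.99, None))  # AgeGroup.ADULT
print(resolve_age_group(0.60, None))  # AgeGroup.MINOR
print(resolve_age_group(0.60, True))  # AgeGroup.ADULT
```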

One last reflection that ties directly into the paper of the week. Leaving aside BigTech abuses, which nobody questions: has anyone stopped to think about whether we’re effectively protecting our kids, or just repainting a house (a regulatory model) that has become obsolete? I repeat: check the paper of the week.

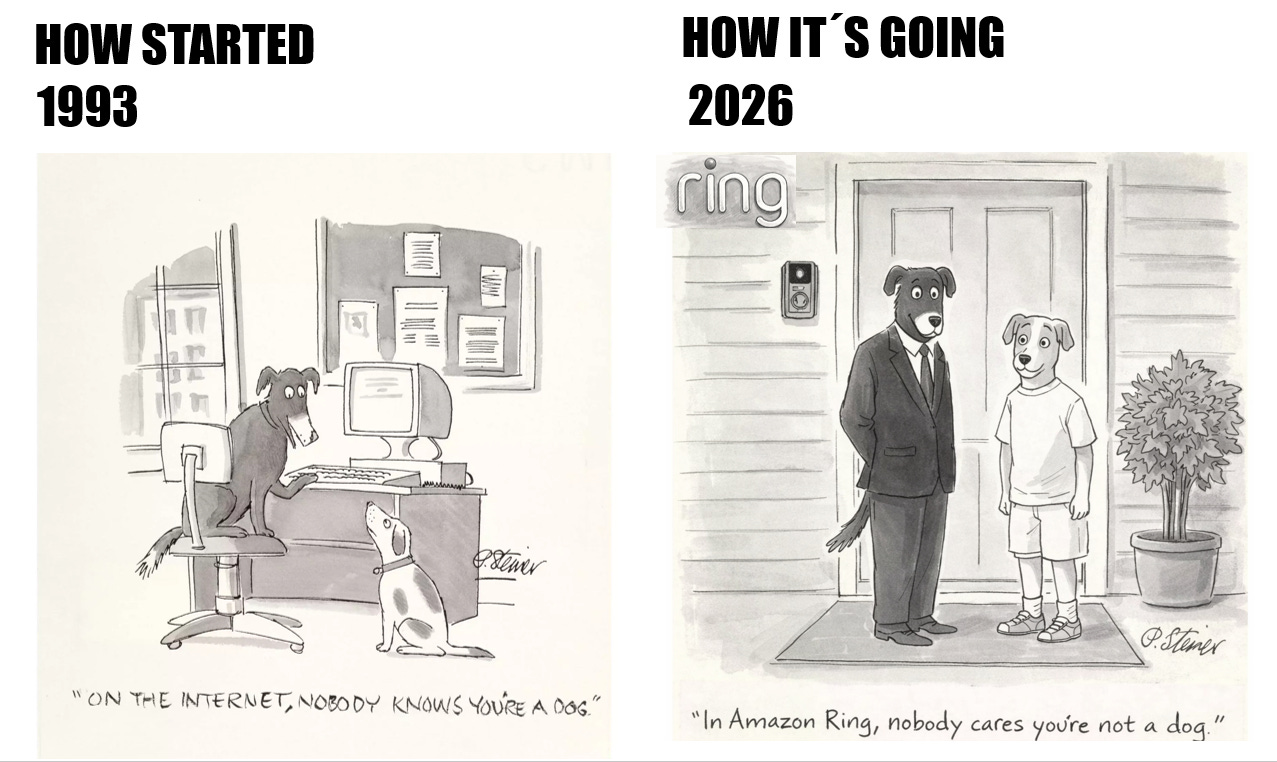

.- Amazon Ring’s Super Bowl puppy ad left any data person with a shred of self-respect speechless. The video itself closes this edition as our “Da-Ta-Dum Bass”, but we thought it worth putting in context with another iconic tech vignette.

.- The TikTok thing (an active week, as you can see, for regulatory friction with BigTech): the European Commission has issued preliminary findings that TikTok infringed the Digital Services Act through the addictive design of its recommendation systems. NOTE: the focus is not on content but on engagement by design: a continuous-engagement architecture (endless scrolling, above all) that pushes users, many of them minors with no trace of a fully formed prefrontal cortex, into automatic, perennial, and devastating consumption. Via Rosalía Anna D’Agostino.

The question is whether any social network or service crawling around the internet complies. Especially all those that copied TikTok’s formula to try to compete against this new vampire. There aren’t enough necks for so many creatures of the night.

And then there are all the video games built around free-to-play, freemium, or assorted micropayment business models. Epic, which knows the topic well from Fortnite, gets hit again, this time for a million and change, over “addictive patterns”. Let’s not forget the AEPD document on patterns of this kind, which is spot-on about quite a few of the ones used around here.

.- We’d have to see who the champion DPA is, but the CNIL flexes with almost €490 million in fines for 2025. It’s always an interesting source of documents, especially that full AI section of theirs.

.- The European Commission, not content with the above, considers that Meta abuses its dominant position by blocking third-party access (AI included) to WhatsApp. Interim measures are being considered to avoid irreparable harm. The case foreshadows a new regulatory front: interoperability as a competitive requirement for AI ecosystems, not just for messaging or for Apple’s and Android’s little fiefdoms, I MEAN markets. Via Thomas Höppner.

.- Almost at the final horn (Helm’s, no less), the EDPB and the EDPS issued a joint opinion on the Digital Omnibus changes to the GDPR. Short version in the press release/post.

📖 High density docs for data junkies ☕️

.- And more Meta, buying time on its possible €225 million fine: the one where the EDPB stepped in with a binding decision forcing the Irish authority to raise the fine. The extra time comes from the CJEU ruling that this type of EDPB decision can be challenged. Meta’s appeal is upheld, the General Court’s order is set aside, and the case goes back to the General Court so it can rule on the merits of the GDPR infringement. Press release and ruling.

.- Google Chromebooks in Danish schools: illegal but unpunished. The Danish DPA decision is the kind of thing that makes you want to, I don’t know, get a shot in the very tip of the actual [they take it away]. After years of investigation, it concludes that the use of Google Workspace in schools is “probably illegal”, yet closes the case without sanctions. The decision finds serious breaches (international transfers, lack of control over sub-processors, a deficient DPIA) with no practical consequences. Of course, any resemblance to the situation in classrooms in any Spanish autonomous community (squatted by Google and Microsoft) is pure coincidence. Andalusia, for example.

.- Interesting and practical post (short and to the point, just how we like it) on the ICO guidance on pseudonymization and transfers. The ICO clarifies that pseudonymized data remains personal data for joint controllers and processors, but may stop being so for independent third parties without access to the key.
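To see why the key is the dividing line, here is a minimal sketch assuming keyed hashing (HMAC-SHA256, via Python’s standard `hmac` module) as the pseudonymization technique; the ICO guidance does not prescribe this particular method, and the e-mail addresses and field names are made up. Whoever holds the key can re-link a token to a known person; a recipient with only the tokens cannot even reproduce them by hashing the identifier. Whether the data actually stops being personal for that recipient still depends on any other means of re-identification it might reasonably have.

```python
import hashlib
import hmac
import secrets


def pseudonymize(identifier: str, key: bytes) -> str:
    """Replace a direct identifier with a keyed HMAC-SHA256 token (hex)."""
    return hmac.new(key, identifier.encode("utf-8"), hashlib.sha256).hexdigest()


def relink(identifier: str, records: list[dict], key: bytes) -> list[dict]:
    """Only a key holder can find a known person's records again."""
    token = pseudonymize(identifier, key)
    return [r for r in records if r["subject"] == token]


# Secret key kept by the controller (and shared with its processor, if any).
key = secrets.token_bytes(32)

# Dataset handed to an independent third party: tokens and attributes, no key.
shared = [{"subject": pseudonymize(email, key), "requests": n}
          for email, n in [("ana@example.com", 2), ("luis@example.com", 5)]]

print(relink("luis@example.com", shared, key)[0]["requests"])  # 5

# Without the key, plain hashing of the same e-mail matches nothing.
guess = hashlib.sha256(b"luis@example.com").hexdigest()
print(any(r["subject"] == guess for r in shared))  # False
```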

💀Death by Meme🤣

🤖NoRobots.txt or AI Stuff

.- “Deepfake Pornography is Resilient to Regulatory and Platform Shocks”. The conclusions of this paper by Alejandro Cuevas and Manoel Horta Ribeiro may well corroborate what we said earlier: “These results indicate that the compound intervention did not suppress SNCEI [pornographic deepfakes] activity overall but instead coincided with its redistribution across platforms, with substantial heterogeneity in timing and magnitude. Together, our findings suggest that deplatforming and regulatory signals alone may shift where and when SNCEI is produced and shared, rather than reducing its prevalence.”

.- A long, extra-long even, but accessible post by Nazir on tools that measure brand visibility in AI and LLM outputs. It focuses on prompt tracking as a new SEO metric. These tools redefine what we used to understand as “audience”: no longer human users, but probabilistic models that mediate access to information.
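Nazir’s post covers commercial tools. As a rough illustration of what “prompt tracking” measures, here is one simple metric we are assuming for the example: the share of a fixed set of tracked prompts whose AI answer mentions a given brand at least once. Prompts, brands and answers below are invented, and real tools may also weight position, sentiment or cited sources.

```python
import re


def visibility_share(answers_by_prompt: dict[str, str], brands: list[str]) -> dict[str, float]:
    """Share of tracked prompts whose AI answer mentions each brand at least once."""
    total = len(answers_by_prompt) or 1  # avoid dividing by zero on an empty run
    share = {}
    for brand in brands:
        # Whole-word, case-insensitive match, so "Acme" does not count "Acmeville".
        pattern = re.compile(rf"\b{re.escape(brand)}\b", re.IGNORECASE)
        hits = sum(1 for answer in answers_by_prompt.values() if pattern.search(answer))
        share[brand] = hits / total
    return share


answers = {
    "best project management tool?": "Acme and Globex are both popular choices.",
    "cheapest project management tool?": "Globex has a free tier.",
}
print(visibility_share(answers, ["Acme", "Globex", "Initech"]))
# {'Acme': 0.5, 'Globex': 1.0, 'Initech': 0.0}
```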

Brandon Sanderson via Guido van Rossum via Simon Willison.

📄Paper of the week

.- Having parents “validate” with their consent the collection and processing of their children’s personal data is a deranged approach in itself, for many reasons: (i) that “take-it-or-leave-it” consent is tailored to the platforms, not to the authorizing parents, and certainly not to minors; (ii) I know few parents who even half understand how big platforms process their data, and many more who engage in mindless, cringe sharenting; (iii) kids today have a distinctive concern for their privacy, quite different from ours: we have more life experience, but they have specific knowledge. Yet nobody listens to them.

You’ll find some of these ideas, developed at length, clearly, and much better written, in “Rethinking youth privacy” by Danielle Citron and Ari Ezra Waldman.

🔗Useful tools

.- Oliver Schmidt-Prietz has understood perfectly that the added value no longer lies in the model: it has shifted to the expert translation of law into reproducible operational workflows, moving power from vendors to practitioners. We’ll be watching closely for the release of that GDPR plugin on GitHub.

.- Bilal Ghafoor has put together (and sends for free by DM on LinkedIn upon request) a neat, detailed Excel template for tracking data subject access requests, covering execution, response, and the maximum deadline.
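The maximum-deadline column hides a bit of calendar arithmetic. A minimal sketch of how it can be computed, assuming the Art. 12(3) GDPR periods (one month from receipt, extendable by two further months where necessary) and the “corresponding calendar date” convention from the UK ICO’s guidance, falling back to the last day of the month when that date does not exist. Class and field names are hypothetical, not taken from Bilal’s template, and other counting conventions can shift the date by a day.

```python
import calendar
from dataclasses import dataclass
from datetime import date
from typing import Optional


def add_months(start: date, months: int) -> date:
    """Same calendar day `months` later, clamped to the last day of a shorter month."""
    month_index = start.month - 1 + months
    year = start.year + month_index // 12
    month = month_index % 12 + 1
    last_day = calendar.monthrange(year, month)[1]
    return date(year, month, min(start.day, last_day))


@dataclass
class AccessRequest:
    # Hypothetical columns: receipt date, extension flag, response date.
    received: date
    extended: bool = False            # Art. 12(3): two further months "where necessary"
    responded: Optional[date] = None

    @property
    def deadline(self) -> date:
        return add_months(self.received, 3 if self.extended else 1)

    def is_overdue(self, today: date) -> bool:
        # A request answered on or before the deadline is never overdue.
        return (self.responded or today) > self.deadline


print(AccessRequest(date(2026, 1, 31)).deadline)                     # 2026-02-28
print(AccessRequest(date(2026, 1, 31), extended=True).deadline)      # 2026-04-30
print(AccessRequest(date(2026, 3, 3)).is_overdue(date(2026, 4, 4)))  # True
```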

.- We do our own AI shenanigans too, of course: case in point, a very cheeky legal report on OpenClaw.

🙄 Da - Ta - Dum Bass!!!

Pure horror-movie cinema: the now-famous Amazon Ring ad at the Super Bowl

If you think someone might like this newsletter, or even find it useful, feel free to forward it.

If you think we missed a document, comment, or bit of nonsense that clearly belonged in this week’s Zero Party Data, write to us or leave a comment and we’ll consider it for the next edition.