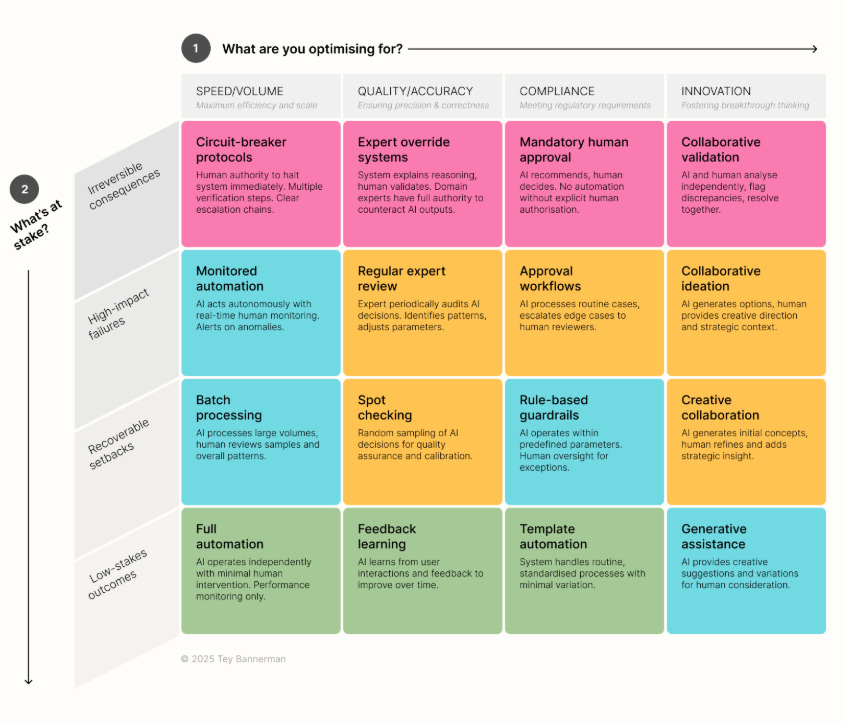

I discovered this framework by Tey Bannerman to practically land that oh-so-cute topic of “human in the loop” in AI tools, and I fell in love with it:

It’s really hard for me to abstract in this way to achieve such a precise, practical, and useful visualization.

However, from a legal point of view, it’s not exactly correct: Article 22 GDPR already kicks in as soon as automated decisions have “legal or similarly significant effects,” and this factor means that many more boxes should be pink.

Would I be able to create an infographic as good as this one, but scrupulously correct from a legal point of view?

Not in a hundred years.

Could I create a semi-joking, semi-serious version—house style—with documented examples and others that are easily recognizable, to point out and explain the problems of “human in the loop” and effective human oversight, and the work it takes to comply with the AI Act and GDPR?

Here you go.

We called it [Minority Report] because it aims to shine a light on all those issues nobody talks about, and yet you see them everywhere.

We’re so insistent, we’ll be explaining every quadrant in full detail, with examples.

Grab a pack of tissues and a coffee before you start.

You are reading ZERO PARTY DATA. The newsletter on tech news from the perspective of data protection law and AI by Jorge García Herrero and Darío López Rincón.

In the spare time this newsletter leaves us, we like to solve complicated issues in personal data protection and artificial intelligence. If you have any of those, wave at us. Or contact us by email at jgh(at)jorgegarciaherrero.com


Level 1: PROHIBITED PURPOSES (Art. 5 AIA)

Quadrant 1.1: Prohibited + I Want to Comply with AI Act

“Potato-potahto” or “We comply except for a few things”

The system exists and does not exist at the same time. Officially, you don’t do any of this because it’s forbidden. De facto, you do it, but you call it something cuter.

Any resemblance to reality is pure coincidence:

“It’s not social scoring, it’s a ‘comprehensive citizen conduct evaluation system’ for optimizing public services.”

“It’s not subliminal manipulation, it’s ‘choice architecture based on behavioral science.’”

Oversight:

An ethics committee meets quarterly to confirm that “technically” you’re not doing the forbidden thing because you’ve renamed it. Minutes in PDF. Only Otter.ai pays attention to these minutes: it summarizes them so no one reads them and records them for its own purposes.

Quadrant 1.2: Prohibited + I Want to Comply with GDPR

“The Magic Consent”

If you get the Data Subject’s explicit consent, is it still prohibited? Spoiler: yes, but you keep it up, José Luis.

Any resemblance to reality is pure coincidence:

47-page cookie pop-up including clause 23.5.b: “You consent to the use of emotional inference systems based on facial analysis to personalize your experience”

“I have read and accept that my behavior on social networks may be used to calculate my credit trustworthiness score” ← 0.02% of users read this

Oversight:

A script generated with Claude Code checks that the acceptance box is not pre-checked. “My work here is done.”

Quadrant 1.3: Prohibited + I Don’t Want to Comply with Anything

“YOLO Compliance”

Oversight? What for? If we get fined, either insurance pays or (more likely) we put it in OPEX.

Any resemblance to reality is pure coincidence:

Clearview AI scrapes the entire Internet to train (and run) its facial recognition tool: “Oversight? Our human supervisor is the CEO. He approves everything” (Fined €20M by the Italian Garante).

“We operate from outside the EU, so your regulations don’t apply to us.” Narrator voiceover: “Yes, they did, darling”.

Oversight:

The legal intern reviews Jira tickets when they have time left over. Spoiler: they never have time left over.

Level 2: HIGH-RISK PURPOSES (Annex III of the AIA)

Systems for hiring, credit, education, essential services, law enforcement, permitted biometrics, worker management, etc.

Quadrant 2.1: High Risk + I Want to Comply with AI Act

“The Impenetrable Compliance Checklist”

You comply with Art. 14 of the AIA to the letter. On paper. In practice, it’s another story.

Art. 14 Requirements:

· Competence to understand the system’s capabilities and limitations, to detect one’s own biases (e.g., automation bias) and in the system, to correctly interpret its outputs.

· Authority not to use the system; to annul or reverse the output; to interrupt its operation.

Real example:

Amazon’s automated hiring system (2014-2018):

The impenetrable checklist: “Human supervisors review AI recommendations”

In practice: The system discriminated against all female applicants for technical positions. Nice.

Supervisors never detected it.

Was the technology crappy? Not at all! The system learned to infer the candidate’s gender by detecting words men use more than women, to weed out the latter.

The main problem was that the training dataset included years and years of active discrimination by Amazon’s human recruiters: the model simply learned to do the same thing more efficiently, perpetuating the bias.

Effective oversight: Amazon abandoned the project in 2017, when it couldn’t guarantee that the system wouldn’t find other discriminatory patterns.

No, in case you’re wondering, this doesn’t count as an example of a “kill switch”.

Lesson learned: A human supervisor can “review” without “understanding” for four consecutive years. And they will, if you don’t provide the proper training.

Oversight implemented (any resemblance to reality is coincidental):

Training to avoid confirmation bias: Mandatory 45-slide PowerPoint. Post-test with 10 questions. Pass rate: 100% (explanation: they could retake the test infinitely. And they did. Oh yes, they did).

Override mechanism (reversing the automated decision): The “Reverse AI recommendation” option requires (i) a 200-word written justification, plus (ii) supervisor approval, and (iii) a Jira ticket.

Result: Override rate: 0.03%. A success?? If this happens in your organization, someone should ask themselves some questions. And maybe that someone is you.
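For the someone asking those questions, here is a minimal sketch of how an override rate could be computed from a decision log. Everything in it is an assumption for illustration: the `Decision` record, the field names, and above all the `min_rate` threshold, which is a made-up alarm level and not a figure taken from the AI Act or the GDPR.

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class Decision:
    ai_recommendation: str  # what the system proposed, e.g. "reject"
    final_outcome: str      # what the human supervisor signed off on


def override_rate(decisions):
    """Share of decisions where the human outcome differs from the AI proposal."""
    if not decisions:
        raise ValueError("no decisions to evaluate")
    overridden = sum(1 for d in decisions if d.final_outcome != d.ai_recommendation)
    return overridden / len(decisions)


def looks_like_rubber_stamping(decisions, min_rate=0.01):
    # min_rate is a hypothetical alarm threshold, not a legal standard.
    return override_rate(decisions) < min_rate


# Toy log (invented): 10,000 decisions, 3 of them reversed by a human.
log = [Decision("reject", "reject")] * 9997 + [Decision("reject", "approve")] * 3

print(f"Override rate: {override_rate(log):.2%}")
print("Rubber-stamping suspected:", looks_like_rubber_stamping(log))
```

A low number proves nothing by itself (maybe the system really is that good), but it is a cheap signal that tells you where to go and look.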

If you’re in this quadrant, call us. We’ll be happy to assist.

Quadrant 2.2: High risk + I want to comply with GDPR

“Rubber-stamping Oversight”

“The Avengers had a Hulk. We had a brilliant idea instead: put José Luis in the loop.” A supervisor who’s a total badass, right? Problem solved.

Article 22 GDPR prohibits decisions “based solely on automated processing” that produce legal or similarly significant effects.

Your solution: add a human finger clicking “Approve” like there’s no tomorrow.

Technically, it’s no longer “solely automated.” Judges see it differently, but you can write whatever you want on paper… until the first complaint lands.

The plot twist: the Schufa judgment of the CJEU (C-634/21, 2023)

The case: Schufa sells a credit risk scoring service to banks. A bank, following Schufa’s score, denied credit to a client.

The client sued Schufa and the bank.

Schufa said: “I don’t make decisions, I just make proposals to the banks.”

The bank said: “I don’t make automated decisions, I take Schufa’s proposals and Johannes Luisen reviews them.” “Can’t you see this giant rubber stamp?”

The Court of Justice of the EU said: If the bank “draws strongly” on the score, producing that score is already an automated decision caught by Article 22 GDPR. Johannes Luisen’s rubber stamp does not count as “human review” if he systematically rubber-stamps the automated proposal.

Examples of (fictional) decision flows: This never happens in practice, obviously.

Case 1 - Credit scoring:

The AI generates a credit score.

José Luis steps in: Reads the score (78/100). Checks declared income. Reviews in 30 seconds. Approves/rejects. Good job, mate.

The invisible bias: According to a 2021 Stanford University study, credit-scoring models are between 5% and 10% less accurate specifically for lower-income brackets and minorities (vs higher-income and WASP individuals). José Luis knows nothing about this if he hasn’t been trained on the strengths and weaknesses of the system he’s supposed to review.

One-liner comment in the PIA: “Decisive human oversight in accordance with Art. 22 GDPR.” (Chest-bump showdown between the DPO and the Compliance Director, Milli Vanilli style. Nah, just kidding: they’re the same person.)

Case 2 – Deliveroo and Foodinho (fined by the Garante €2.5 million and €2.6 million respectively):

An algorithm assigns shifts to riders based on a performance score.

The managers (Giuseppe Luigi and his amici) can modify the assignments.

In reality, the system handles 8,000 riders. The amici have neither the time nor the information to actually question anything.

Italian Garante: There’s a glaring absence of systematic review of the accuracy and quality of system decisions (output), as well as the relevance of the data (input) used to generate them. Also: the alleged contractual necessity didn’t apply (it must be objectively necessary: remember this).

Implemented oversight (any resemblance to reality is purely coincidental):

Interface design: The AI makes a recommendation. José Luis sees the recommendation on a giant screen. Sees a big green “Approve” button. Sees a red “Reject” button. Clicking the red one brings up a menu with 15 possible reasons and a free-text field for 300 words, just like before.

Relevant KPIs:

What actually counts in the company: “Decisions processed per hour.”

What the DPO suggests for the PIA: “% decision accuracy” or “Rate of bias detection in the system.”

What’s really documented: none of the above.

The lethal legal trifecta:

Automation bias + compliant interface design + perverse KPIs = industrial-scale rubber stamping.

If you’re in this quadrant (and want to improve!), call us. We’ll be happy to help.

Quadrant 2.3: High risk + I don’t want to comply with anything

“Schrödinger’s Supervisor”

You outsource the compliance of your high-risk AI system. Technically, they guarantee you “human oversight” and that it won’t be “José Luis, the NPC.”

And indeed: your Supervisor works in Bangalore. “Reviews” 300 cases/hour on a 13-inch screen. Doesn’t speak your local language. Has zero context. Zero training. But hey! He’s human.

Documented real-life examples

The Dutch ChildCare scandal (2013-2021):

A risk-classification algorithm used by the Dutch Tax and Customs Administration (not SyRI itself, the separate welfare-fraud system that a Dutch court struck down in 2020) erroneously flagged fraud in childcare benefits paid to vulnerable families.

The data triggering the automated “alarm”: “Foreign-sounding surnames” + “dual nationality” = high fraud risk.

Supervisors only intervened in cases labeled “high fraud risk.” In practice, they rubber-stamped the vast majority.

Result: Over 26,000 families were required to repay the aid (which, remember, was received for being at risk of social exclusion). The amounts ran into tens of thousands of euros per family, with no possibility of payment plans.

More than 1,100 children were separated from their families and placed under state custody.

The Dutch government resigned in January 2021 due to the scandal. The then prime minister, Mark Rutte, leads NATO nowadays.

Fun fact: The lawyer who blew up this whole mess wasn’t Erin Brockovich, but Eva González Pérez: she’s Spanish.

Ah, the irony! In the year of our Lord 2025, a government formed by the same parties is promoting the implementation of another system (you’d never guess the name: it’s called “Super-SyRI”) that aims to combine data from different agencies to prevent and combat serious crimes. Good luck with that.

Snitch governance on Instagram/Threads (October 2024):

Meta moderates content using AI. Human moderators review when in doubt.

What did the PIA say? “Human moderators make decisions with lots of context.”

Yes, but: One of the tools crashed and stopped showing context to moderators. Tons of users lost access to their accounts, and tons of posts were deleted for no reason.

What did Meta do? Unsurprisingly, blamed the human moderators for making decisions without enough context.

Moral crumple zone

The act of dumping the blame on the human supervisor when the AI system fails even has a name: “Moral crumple zone” (Author: Madeleine Clare Elish).

My free translation:

The emphasis on human oversight as a safeguard allows governments and AI vendors to do two incompatible things at the same time: (i) promote AI—because its capabilities surpass those of mere mortals—while (ii) taking AI and its provider off the hook for accountability, putting the weight on the supposed safety offered by human supervision—on paper, at least.

Level 3: SIGNIFICANT OR LEGAL EFFECTS ON PEOPLE (GDPR Art. 22)

Systems that are not high-risk under the AI Act, but have legal or similarly significant effects under GDPR.

Quadrant 3.1: Significant effects + I want to comply with the AI Act

Type of oversight: “Overkill Compliance”

You apply high-risk requirements from the AI Act to a system that isn’t high-risk.

Your lawyer is chest-puffing. Your CFO is crying over the costs. Your tech team doesn’t know what to think.

Now this is true science fiction:

TV streaming content recommendation system: You apply Article 14 AI Act, the full package, with DPIA and conformity assessment. Everything. Even a FRIA.

In practice: It’s probably a low-risk purpose under the AI Act, but it could have “significant effects” under the GDPR—Recital 71. Yeah, it’s a stretch.

Result: You’re the compliance unicorn. You get invited to all the conferences. You’re fired in December.

Implemented oversight:

Human oversight committee with 7 members

Weekly meetings

Real-time dashboard of oversight metrics

Exhaustive documentation of every “override”

Annual budget: €450,000

System: Movie recommendations

Actual risk: Low

Virtue signalling: Top

Don’t call us if you’re here. In fact, there’s no one here.

Quadrant 3.2: Significant effects + I want to comply with GDPR

“The G-spot of compliance”

This is the sweet spot of realistic compliance. You apply Article 22 GDPR to the letter, implement whatever safeguards make sense, document everything, and pray that no Data Subject files a complaint.

Any resemblance to reality is purely coincidental:

Typical average company case:

Your clients interact with an AI chatbot (these days, an AI Agent) that has access to all their data.

Oversight: a large team of supervisors consisting of two people, who review decisions and handle complaints.

In practice: 0.001% of clients request a review. 0% of decisions are changed because “Who am I to go against this super expensive AI?”

One-line comment in the PIA: “Decisive human oversight procedure in accordance with Article 22 GDPR.” ✅

Real compliance: 🤷 Nobody uses it, nobody tests it.

Oversight actually implemented:

Section 56 of your privacy policy explains “how our algorithm works” in 1,800 words full of generalities.

Complaint process: Email to compliance@company.com. Automated reply: “We have received your request: it will be reviewed within 30 days.”

In practice: Junior analyst studies the case for five minutes. Has no information about how the system works. Looks at the output. Looks at the input. Rejects the appeal by hitting a big green button.

Rate of decisions reversed after appeal: not recorded.

“Technically compliant.”

If you’re in this quadrant, call us. We’ll be happy to help.

Quadrant 3.3: Significant effects + I don’t want to comply with anything

Type of oversight: “Move Fast and Break Rights”

If nothing happens to Microsoft, Amazon, Apple, Meta, Google, OpenAI, Anthropic... why should I invest a penny in compliance? Besides, they’re just going to put obstacles in my way...

“We conquer territory first,” and then the paper-pusher can come do the paperwork if the Spanish DPA knocks on the door.

Documented real-life examples

Wells Fargo’s dodgy mortgage approval system (2022):

The system gave higher risk scores to Black and Latino applicants than to white applicants with similar finances.

Human review of automated decisions: Human supervisors “reviewed” applications. The problem is, if the system is biased and supervisors don’t challenge its decisions, the bias just continues (see “Moral crumple zone” above).

Did anyone ever think to measure approval rate disparities by race? Yes, but only when the authorities launched their investigation.
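Measuring it is not rocket science. A minimal sketch, with invented toy data and hypothetical group labels “A” and “B”, of how approval rates per group and their ratio against a reference group could be computed. The helper names are ours, and the sketch deliberately sets no pass/fail threshold: where to draw that line is a legal and statistical judgment, not something a ten-line script settles.

```python
from collections import defaultdict


def approval_rates(applications):
    """applications: iterable of (group, approved) pairs -> approval rate per group."""
    totals = defaultdict(int)
    approved = defaultdict(int)
    for group, ok in applications:
        totals[group] += 1
        if ok:
            approved[group] += 1
    return {group: approved[group] / totals[group] for group in totals}


def disparity_ratios(rates, reference_group):
    """Each group's approval rate divided by the reference group's rate."""
    reference = rates[reference_group]
    if reference == 0:
        raise ValueError("reference group has a zero approval rate")
    return {group: rate / reference for group, rate in rates.items()}


# Invented toy data: 100 applications per group, not real figures from any lender.
applications = (
    [("A", True)] * 80 + [("A", False)] * 20
    + [("B", True)] * 50 + [("B", False)] * 50
)

rates = approval_rates(applications)
ratios = disparity_ratios(rates, reference_group="A")
for group in sorted(rates):
    print(f"{group}: approval {rates[group]:.0%}, ratio vs A {ratios[group]:.3f}")
```

Run it on a schedule rather than on the day the regulator calls, and compare applicants with similar finances, otherwise the ratio tells you very little.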

AI Agents tsunami (2025):

All kinds of companies deploying AI agents for customer service, programming, different kinds of analysis...

A single line in the PIA: “Human in the loop for decisions with significant effects.”

In practice: What’s the definition of “decisions with significant effects”? It depends. At best, oversight consists of post-facto review of a tiny percentage of cases.

Textbook case: The AI taking drive-through orders at a Taco Bell accepted (without, uh, blinking) an order for 18,000 cups of water.

Implemented oversight:

Monitoring dashboard with technical metrics (latency, throughput, error rate)

No metrics on impact for data subjects

“Human oversight” = an engineer jumps in on demand when the system breaks.

The system learns from its mistakes. Mistakes are features, not bugs. Data Subjects are unpaid beta testers.