Is the user responsible for Grok’s ‘deepfake bikinis’, or is it X.com?

Or how to master Grok-vernance in three terrible lessons

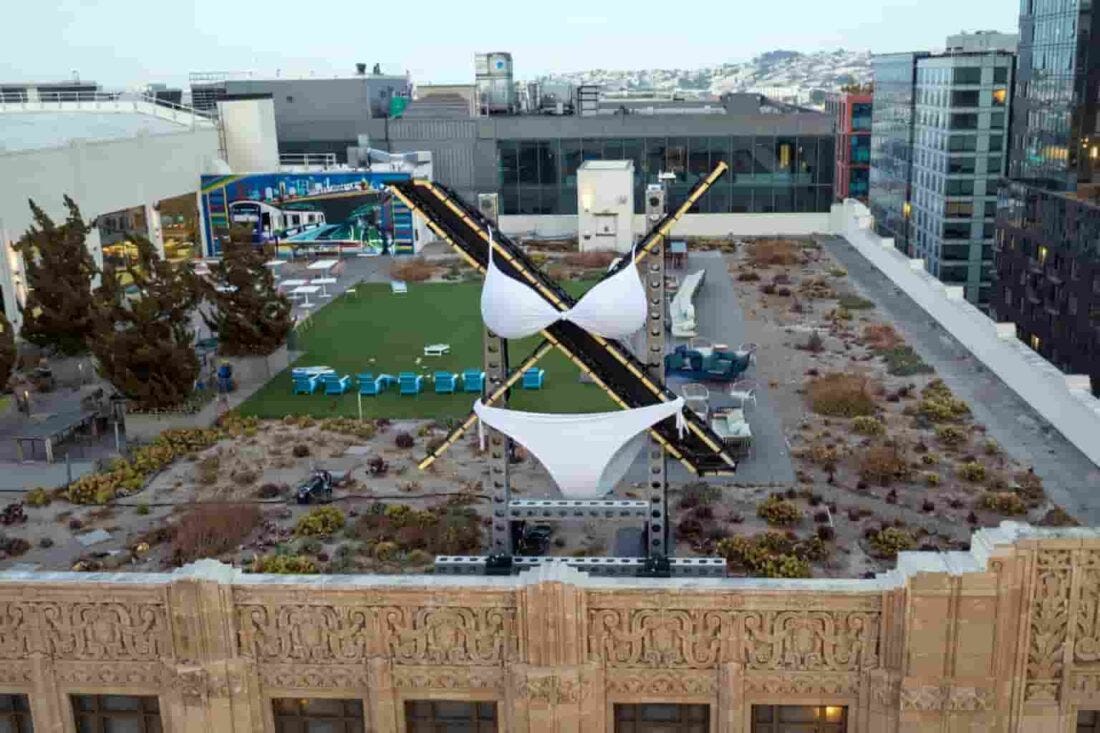

Quick notes on the scandal over Grok undressing people or putting them in a bikini (to no one’s surprise, almost all of them women), including many underage girls, like the girl from Stranger Things.

The nonsense I intend to refute (if you’re not interested in data protection matters, skip straight to number 3):

1.- “All responsibility lies with the user”

2.- “Intermediaries are exempt from liability”

3.- “Grok only does what the user commands”

For the first time in my life, I won’t talk about “Twitter” but about “X.com”.

I was very happy on that platform and I resisted using the X house brand of Mr. Ketamine, but this matter is specific and particular to the new hideousyncrasy of the house.

So X.com it is for today.

You are reading ZERO PARTY DATA. The newsletter about current technology from the perspective of data protection law and AI by Jorge García Herrero and Darío López Rincón.

In the spare time left by this newsletter, we like to solve complicated issues in personal data protection and artificial intelligence. If you have any of those, give us a little wave. Or contact us by email at jgh(at)jorgegarciaherrero.com

Let’s get to it:

1.- “All responsibility lies with the user”

As we explained in detail not even six months ago, using this very same example of technology that undresses the neighbor, what Musk or X.com says is one thing and what the regulations and courts say is another.

1.1.- Domestic exception:

It’s true:

When the user processes data within their domestic sphere and does not publish it, the GDPR (the personal data protection regulation) does not apply to the processing.

On the internet, there are platforms that offer models or instances of AI specifically tuned to generate sexual videos of your “favorite actress” and detailed instructions to train them with the photos of whoever you want.

It’s true:

As long as the outputs or resulting images are not published by users, the GDPR has nothing to say.

BUT it’s a half-truth that actually means the opposite of what Elmo Musk claims:

The domestic exception means that the GDPR does not apply to the user.

But the GDPR still applies to the platform (X.com) that provides the means related to such personal or domestic activities.

What set off all the alarms was the ability for users to bring @Grok into a (public) thread, graciously ask it to undress someone or put them in a bikini, and have @Grok then publish the result, to the amusement of everyone except, obviously, the person involved (usually a woman).

The key is the automatic publication of these gracious requests to @Grok.

And that’s why X.com’s reaction was to restrict the ability to generate naughty stuff to paying users, and only in private.

I’m not making this up: it’s the literal wording of the last sentence of Recital 18 of the GDPR.

“However, this Regulation applies to controllers or processors which provide the means for processing personal data for such personal or household activities.”

The CNIL, the French data protection authority, takes the view that the provider can avoid this responsibility only when two requirements are met:

(i).- The processing is initiated at the data subject’s request (in this case, the owner of the mobile), and carried out under their control and on their behalf;

(ii).- The processing is carried out in a separate environment, that is, without any possibility of intervention by the provider or any third party over these data: the provider has provided the means for data processing, but cannot access or act upon them.

As you can see, neither of these two requirements is met.

And after the announced measures, the second requirement still isn’t met: stay with me.

1.2.- In conclusion: the GDPR does apply to X.com.

X.com would have to verify whether there is a legal basis for the type of processing the user intends to carry out (a deepfake of a recognizable photo of a person, with some element of nudity, that will be published on the social network immediately afterward). That basis is obvious if the image is the user’s own, but not so obvious if it is someone else’s (a third party’s) data.

Traditionally, X.com might have responded that “until other users report published content as unlawful, I don’t know anything and I’m not responsible.”

This claim has two problems, and not small ones: the DSA, which I won’t get into for space reasons, and the recent Russmedia judgment by the CJEU.

2.- “Intermediaries are exempt from liability”: The Russmedia Judgment

As it happens, the CJEU published a groundbreaking ruling not even two months ago (Russmedia, also covered in this blessed newsletter) on the heightened obligation to review processing that involves publishing content with sensitive third-party data:

The Russmedia ruling, adapted to the case of X.com, would mean the obligation to:

Identify posts (in this case) containing sensitive data

Verify if the user who is about to post is the person whose sensitive data they intend to publish (that is: not necessarily to verify the user’s identity, but almost), and if not,

Deny its publication, unless the user can prove that the real data subject (the person in the image) has given explicit consent or that another circumstance of Article 9.2 GDPR applies (that is, in practice: deny its publication).
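Read as a decision procedure, the three steps above could be sketched roughly like this. It is only an illustration of the logic as I have described it: `Post`, its fields and `pre_publication_gate` are hypothetical names, the hard part (actually detecting sensitive data and identifying the person depicted) is assumed to have happened elsewhere, and nothing here reflects how X.com works or how the judgment is worded.

```python
from dataclasses import dataclass
from enum import Enum
from typing import Optional


class Decision(Enum):
    PUBLISH = "publish"
    DENY = "deny"


@dataclass
class Post:
    # All fields are hypothetical; in practice a classifier or a
    # verification flow would have to fill them in.
    author_id: str
    contains_sensitive_data: bool
    data_subject_id: Optional[str] = None   # person depicted, if identified
    art9_2_ground_proven: bool = False      # e.g. explicit consent shown


def pre_publication_gate(post: Post) -> Decision:
    # Step 1: identify posts containing sensitive data.
    if not post.contains_sensitive_data:
        return Decision.PUBLISH
    # Step 2: is the poster the person whose sensitive data is published?
    if post.data_subject_id is not None and post.data_subject_id == post.author_id:
        return Decision.PUBLISH
    # Step 3: otherwise deny, unless an Article 9.2 GDPR ground is proven.
    if post.art9_2_ground_proven:
        return Decision.PUBLISH
    return Decision.DENY
```

Note the default: when the person depicted cannot be identified and no Article 9.2 ground is shown, the sketch denies publication, which is the "that is: deny" of the third step.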

Note that I’m not saying this is easy to comply with, I’m just explaining why “all responsibility lies with the user” is self-serving delulu nonsense.

I’m also not getting into the application of civil law (right to honor, privacy, own image) or criminal law for synthetic images of minors in lewd poses (CSAM).

All of this, of course, applies in both the public and domestic spheres.

The fact that proof is harder to obtain in the second context does not make the activity any less unlawful.

3.- “Grok only does what the user commands”

A generative AI model works within a system. And what the system does is decided by those responsible for it.

This (surprising) must-read article from the Financial Times says everything that needs to be said about these people.

It’s the Grok-vernance of X.com.

3.1.- Models don’t arise spontaneously: they are trained with something and for something

From the moment the user enters a prompt until that prompt reaches Grok, a lot happens. And from the moment Grok returns its “output” until it reaches the user, even more happens.

To begin with, a million decisions are made by those responsible before training a generative AI model (most notably here, determining and filtering the training dataset, the purpose, and the model’s fine-tuning), as we explained here.

3.2.- Meta prompt

Then, every model (the best-known commercial ones and the local ones we download and run on our computers) is substantially tunable through a “system prompt”, also known as a meta prompt.

Well, the meta prompt is authored by X.com (or Elon) and Grok primarily does what X.com (or Elon) whispers in its ear, not what you whisper to it.
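To make the "whisper in its ear" point concrete, here is a minimal sketch of how a request is typically assembled in chat-style systems: the operator's instructions go first, in a slot the user never writes. The role-based message layout is a common convention in chat APIs; the function name `build_messages` and the example strings are assumptions of mine, not X.com's actual code or prompt.

```python
from typing import Dict, List


def build_messages(operator_system_prompt: str, user_prompt: str) -> List[Dict[str, str]]:
    """Assemble what the model actually receives for one user request."""
    return [
        # Written by the provider; applied to every conversation.
        {"role": "system", "content": operator_system_prompt},
        # Written by the user; only one part of the model's input.
        {"role": "user", "content": user_prompt},
    ]


# Hypothetical example strings, for illustration only.
messages = build_messages(
    "You are a helpful assistant. Refuse to sexualize real people.",
    "Put the person in this photo in a bikini.",
)
```

Whatever the user types, the model reads the provider's text first and on every single request, which is why the content of that text is the provider's decision and not the user's.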

Grok stands out from the competition because its system prompt (which you can see here) is especially lax. It even made the news that this prompt specifies that “the fact that the user refers to something related to ‘teenage’ and ‘girl’ does not mean she is underage.”

Draw your own conclusions about the intention behind this system instruction.

And judge for yourselves whether X.com is free from all blame in this.

3.3.- Shadow prompting and ex post filtering

Any AI system filters both user prompts before they reach the system (to prevent it from answering abominable things, or being manipulated by prompt injection for security reasons…) and model outputs before they reach the user (in case the training or previous safeguards weren’t enough and the model answers inappropriately…).
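The double filter just described can be sketched in a few lines. This is a toy under stated assumptions: `guarded_generate`, the `toy_*` helpers and the keyword checks are all hypothetical stand-ins (real systems rely on trained classifiers, not keyword lists), and it says nothing about how Grok is actually wired.

```python
from typing import Callable

REFUSAL = "[refused by provider policy]"


def guarded_generate(
    prompt: str,
    model: Callable[[str], str],
    input_ok: Callable[[str], bool],
    output_ok: Callable[[str], bool],
) -> str:
    """Call the model only if the prompt passes; release the output only if it passes."""
    # Ex ante filter: a rejected prompt never reaches the model.
    if not input_ok(prompt):
        return REFUSAL
    output = model(prompt)
    # Ex post filter: a rejected output never reaches the user.
    if not output_ok(output):
        return REFUSAL
    return output


# Toy stand-ins, purely for illustration.
def toy_input_ok(prompt: str) -> bool:
    return "undress" not in prompt.lower()


def toy_output_ok(output: str) -> bool:
    return "nsfw" not in output.lower()


def toy_model(prompt: str) -> str:
    return f"image for: {prompt}"
```

Both filters, and the decision about what they let through, sit on the provider's side of the system: the user controls only the `prompt` argument.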

In fact, huge efforts have always gone into developing tools to detect and filter CSAM, given the seriousness of this crime and the broad consensus on the need to catch this type of criminal.

The fact that Grok has instantly become famous for generating this kind of content makes it very clear that other providers have blocked their systems to prevent it.

As I said before, the ability to generate sexualized, personalized deepfakes on demand is usually found in open, self-managed models, not in conventional commercial ones.

Is there a legal answer to this problem? I think there is, but this is already too long: we’ll leave it for next week.

Enjoy the weekend and shed those extra pounds: yes you can!

Jorge García Herrero

Lawyer and Data Protection Officer