Two years ago, I read a book that helped me understand many things.

One of them answers two questions that, once understood, are essentially the same:

Where should I start automating?

In which parts of my organization will I encounter the most resistance to implementing new automated AI processes?

The book is Power and Prediction, by Ajay Agrawal, Joshua Gans, and Avi Goldfarb. It is a fabulous work in its simplicity and foresight: it was published before the current AI boom and yet anticipates much of its impact on complex organizations.

The concept that captivated me is something I like to call the “anatomy of an automated decision,” a nod to Otto Preminger’s film Anatomy of a Murder.

The explanation applies equally to traditional algorithms and modern artificial intelligences.

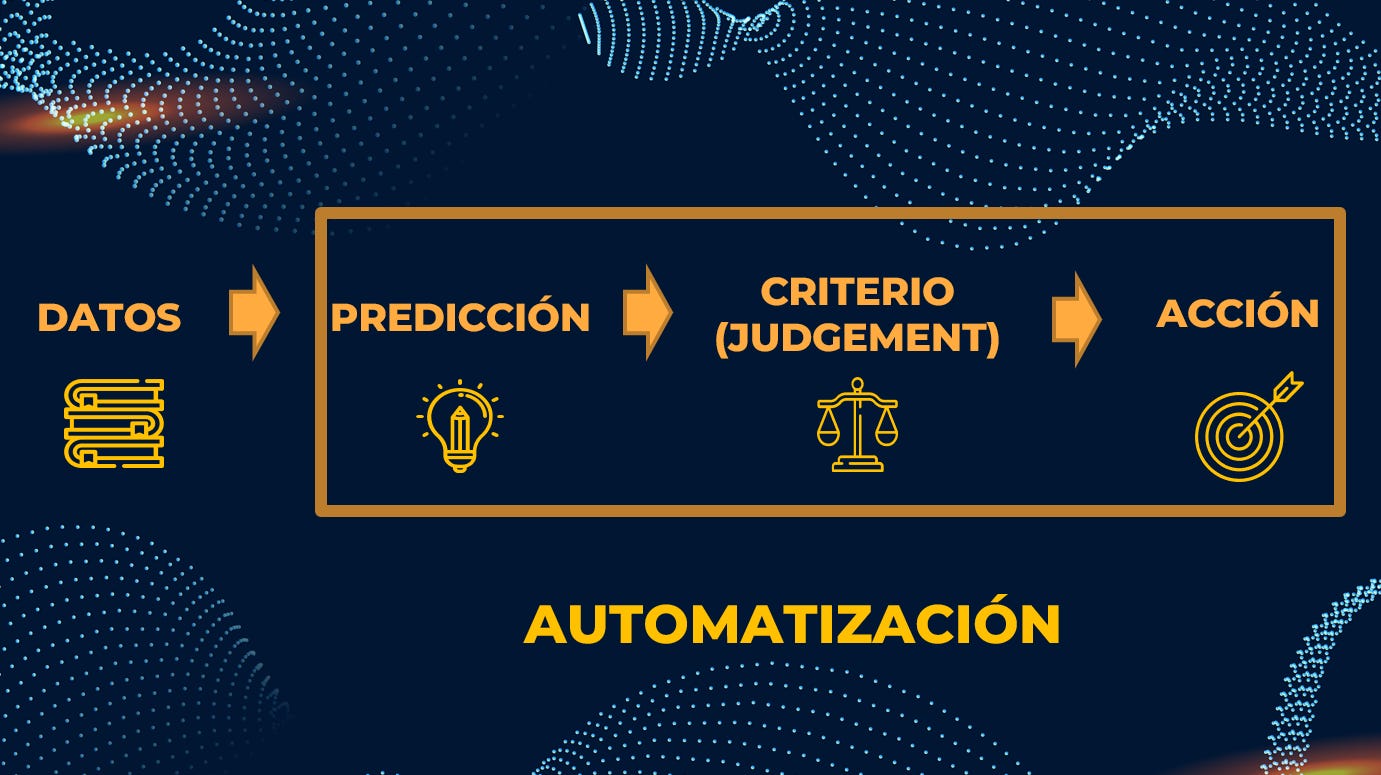

The elements are always the same:

the data or databases on which the prediction is based,

a “prediction,”

the judgment used to make a decision based on the prediction, which in turn is based on the available data, and

the action triggered by the decision.

The interesting parts are the two elements in the middle: prediction and judgment.
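For readers who think in code, here is a minimal sketch of that anatomy. Everything in it is an assumption made up for illustration (the overdue-invoice scenario, the `AutomatedDecision` class, the 60-day scale, the 0.5 threshold); the only point is that prediction, judgment, and action are separate, swappable pieces.

```python
from dataclasses import dataclass
from typing import Callable


@dataclass
class AutomatedDecision:
    """Hypothetical container for the three steps that follow the data."""
    predict: Callable[[dict], float]  # data -> probability of some outcome
    judge: Callable[[float], str]     # probability -> chosen option
    act: Callable[[str], str]         # chosen option -> action carried out

    def run(self, data: dict) -> str:
        probability = self.predict(data)
        choice = self.judge(probability)
        return self.act(choice)


# Toy prediction (assumption): the longer an invoice is overdue,
# the more likely it is to go unpaid, capped at 1.0.
def predict_non_payment(data: dict) -> float:
    return min(1.0, data["days_overdue"] / 60)


# Toy judgment (assumption): act once the risk reaches 50%.
def judge_risk(probability: float) -> str:
    return "remind" if probability >= 0.5 else "wait"


def act_on(choice: str) -> str:
    return f"action: {choice}"


pipeline = AutomatedDecision(predict_non_payment, judge_risk, act_on)
print(pipeline.run({"days_overdue": 45}))
print(pipeline.run({"days_overdue": 10}))
```

Swapping `judge_risk` for a different rule changes the decision without touching the prediction, which is exactly why the two middle elements deserve separate attention.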

You are reading ZERO PARTY DATA, the newsletter on current affairs, tech monopolies, and law by Jorge García Herrero and Darío López Rincón.

In the spare time this newsletter leaves us, we like to solve tricky personal data protection problems. If you have one of those, give us a wave. Or contact us by email at jgh(at)jorgegarciaherrero.com

How were decisions made before the arrival of automated decision-making algorithms?

Until now, the standard was as follows: the same person (aka: the “boss of it all”)

(i) assessed the facts,

(ii) made their own prediction, and

(iii) applied their judgment to make the decision. In plain terms, “they did whatever they felt like.”

The slipperiest element is “judgment,” which in this context means the ability to make the best decision by weighing the pros and cons of the available options.

This is better understood with the example of weather forecasting.

The weather guy explains “judgment”

Well, let’s imagine the weather guy says there’s an 80% chance of rain tomorrow.

The first interesting thing is that his prediction is not deterministic but probabilistic: he doesn’t say that it will rain or that it won’t.

He says there’s an 80% chance of rain. That means that, out of 100 mornings like tomorrow, we would expect rain on about 80 of them and none on the other 20.

And based on that prediction, we have to make a decision.

Either we take an umbrella, accepting that it might not rain and that we’ll have to carry it around all day,

or we leave the umbrella at home, accepting that if it does end up raining, we’ll get wet.

In this context, comparative advantage is based on two factors:

Having the best possible predictions (that is, the most accurate, fastest, and cheapest ones, as we’ll soon see).

And also: having better judgment—that is, the ability to weigh the pros and cons of each possibility and make the best decisions in light of all this.
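The umbrella dilemma can be written down as a tiny expected-cost calculation. This is only a sketch: the cost figures (1 for carrying the umbrella all day, 10 for getting soaked) are invented, and putting numbers on those annoyances is precisely what “judgment” means here.

```python
def expected_costs(p_rain: float, carry_cost: float, wet_cost: float) -> dict:
    """Expected cost of each option for a given probability of rain."""
    return {
        "take umbrella": carry_cost,          # paid whether or not it rains
        "leave umbrella": p_rain * wet_cost,  # paid only if it rains
    }


def decide(p_rain: float, carry_cost: float = 1.0, wet_cost: float = 10.0) -> str:
    """Pick the option with the lowest expected cost (costs are assumptions)."""
    costs = expected_costs(p_rain, carry_cost, wet_cost)
    return min(costs, key=costs.get)


# Same judgment, different predictions.
print(decide(0.8))
print(decide(0.05))

# Same prediction, different judgment: someone who hates carrying things.
print(decide(0.8, carry_cost=9.0, wet_cost=10.0))
```

With these assumed costs, an 80% forecast favors taking the umbrella and a 5% forecast favors leaving it. The last call keeps the 80% forecast but changes the costs, and the decision flips: a better prediction and a better judgment are two different levers.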

Automation

The golden promise of AI is automation:

When processes have been thoroughly studied, formalized, and broken down into segments, and we can obtain sufficiently good (and easy and cheap) predictions in a reasonably controlled environment, it becomes possible to “encode” the judgment and the decision.

This is the ideal setting for automation.

In the coming weeks, we’ll discuss some legal issues that, for whatever reason, no seller of “AI agents”—just to give a simple (but ubiquitous) example—ever mentions.

But today we’ll stay on this theoretical level.

What happens when AI cannot automate 100%?

In these cases, AI produces a very different effect: (i) what Agrawal and his co-authors call “power shifts,” and (ii) fierce resistance.

Power shifts? What’s that?

Remember that, as we said earlier, prediction, judgment, and decision used to be hard to tell apart because all three took place inside the same head.

Now, the source that generates predictions and the entity that makes final decisions based on judgment are being separated.

Two examples: taxi drivers and radiologists

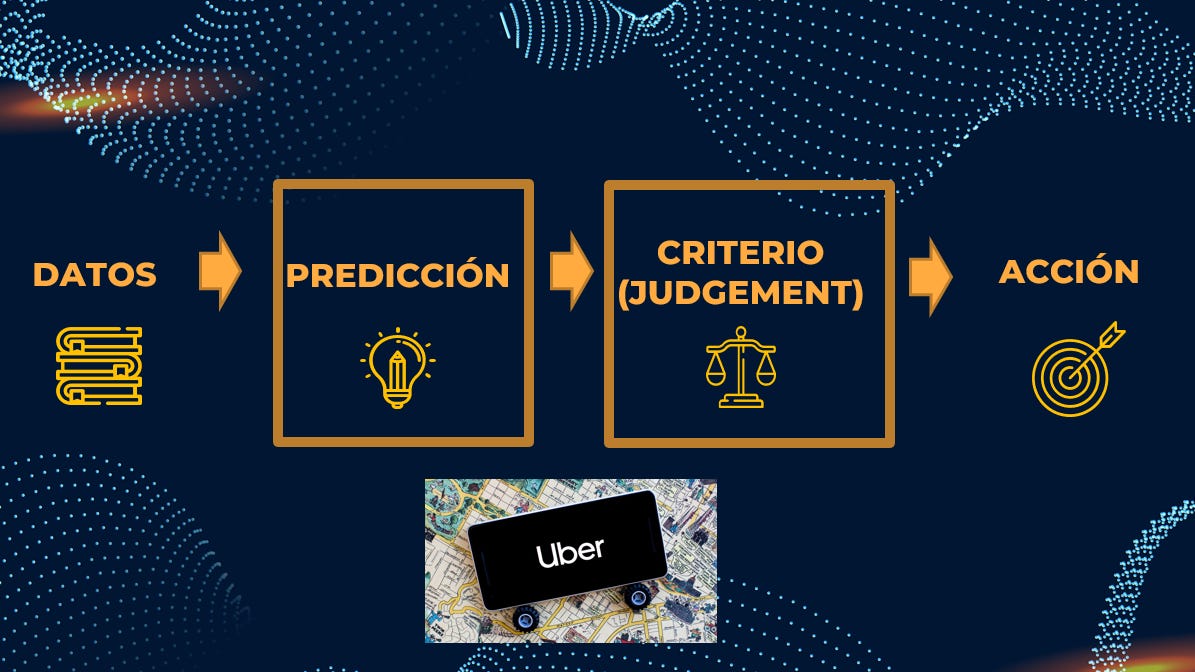

Taxi drivers

Previously, taxi drivers held a monopoly over their profession—leaving aside licensing and administrative issues—because they had a monopoly on the knowledge of where streets were in each city and which route was shortest or easiest to get from one place to another.

The taxi driver made the prediction, applied judgment, and executed (in other words, took you to your destination).

GPS-based navigation systems have democratized the profession. It’s not that taxi drivers suddenly became idiots—it’s that any regular person with a GPS or a smartphone, supported by instant, accurate, and cheap predictions, can now compete with a taxi driver: now the GPS makes the prediction, and anyone can follow its directions.

Radiologists

A more complex example is that of neural networks analyzing medical images.

Neural networks are famously capable of interpreting medical images, reportedly outperforming any radiologist.

We’ve often heard that radiologists were going to disappear because a neural network can compare hundreds or thousands of patterns in seconds, whereas human radiologists can hold far fewer in their heads. Moreover, humans are affected by recency bias: they are influenced by the most recent relevant cases they’ve analyzed.

In practice, neural networks are very accurate and reliable (though possibly not very cheap) at predicting when a patient does not have cancer.

But when the likelihood of the opposite is significant, the radiologist’s judgment becomes highly valuable, because radiologists are still the only ones capable of examining and considering the specific context of the individual patient.
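That division of labor can be sketched as a simple routing rule. This is a toy under loud assumptions: the `route_scan` function and the 1% threshold are hypothetical and are not taken from the book or from any clinical practice. The idea is simply that the model closes the cases where it is confident nothing is wrong and hands everything else to a human.

```python
def route_scan(p_malignant: float, all_clear_below: float = 0.01) -> str:
    """Decide who handles a scan, given the model's predicted probability.

    The threshold is an invented illustration, not a clinical value.
    """
    if not 0.0 <= p_malignant <= 1.0:
        raise ValueError("probability must be between 0 and 1")
    if p_malignant < all_clear_below:
        # The prediction alone is good enough: judgment is already encoded.
        return "model: report as clear"
    # The prediction is only an input: human judgment weighs the context.
    return "radiologist: review in patient context"


scans = {"scan A": 0.002, "scan B": 0.30}
for name, probability in scans.items():
    print(name, "->", route_scan(probability))
```

Who gets to set `all_clear_below` is not a technical detail. It is the judgment, and whoever controls it holds the power that used to sit entirely with the radiologist.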

Resistance to change

Clusters of procedures that can be automated are falling at lightning speed. Professionals who fail to ride the wave of new technological opportunities with their expertise will be trampled by it.

In all other contexts (still the vast majority, fortunately), two phenomena occur, both manifestations of the same issue:

Mid-level managers whose decision-making authority is clearly challenged, surpassed, or at the very least threatened by AI will do everything possible to block or delay its implementation.

Workers who have successfully harnessed a useful “tamagotchi” mostly stay silent to avoid losing credit, losing the tamagotchi, or being buried in work because they’re “too clever.” Very few make their progress public or share it.

Those who lead organizations capable of sharing and capitalizing on the harmonious synthesis of human and technological resources will come out ahead in the AI game.

Jorge García Herrero

Data Protection Officer